This repository is part of the thesis project for the MSc in Robotics and Autonomous Systems at the University of Lincoln, UK. The project is a ROS-based online transfer learning framework for human classification in 3D LiDAR scans that uses a YOLO-based tracking system. The motivation is that such classifiers need a considerably large amount of training data, and annotating that data is tedious and costly.

The main contributions of this work are:

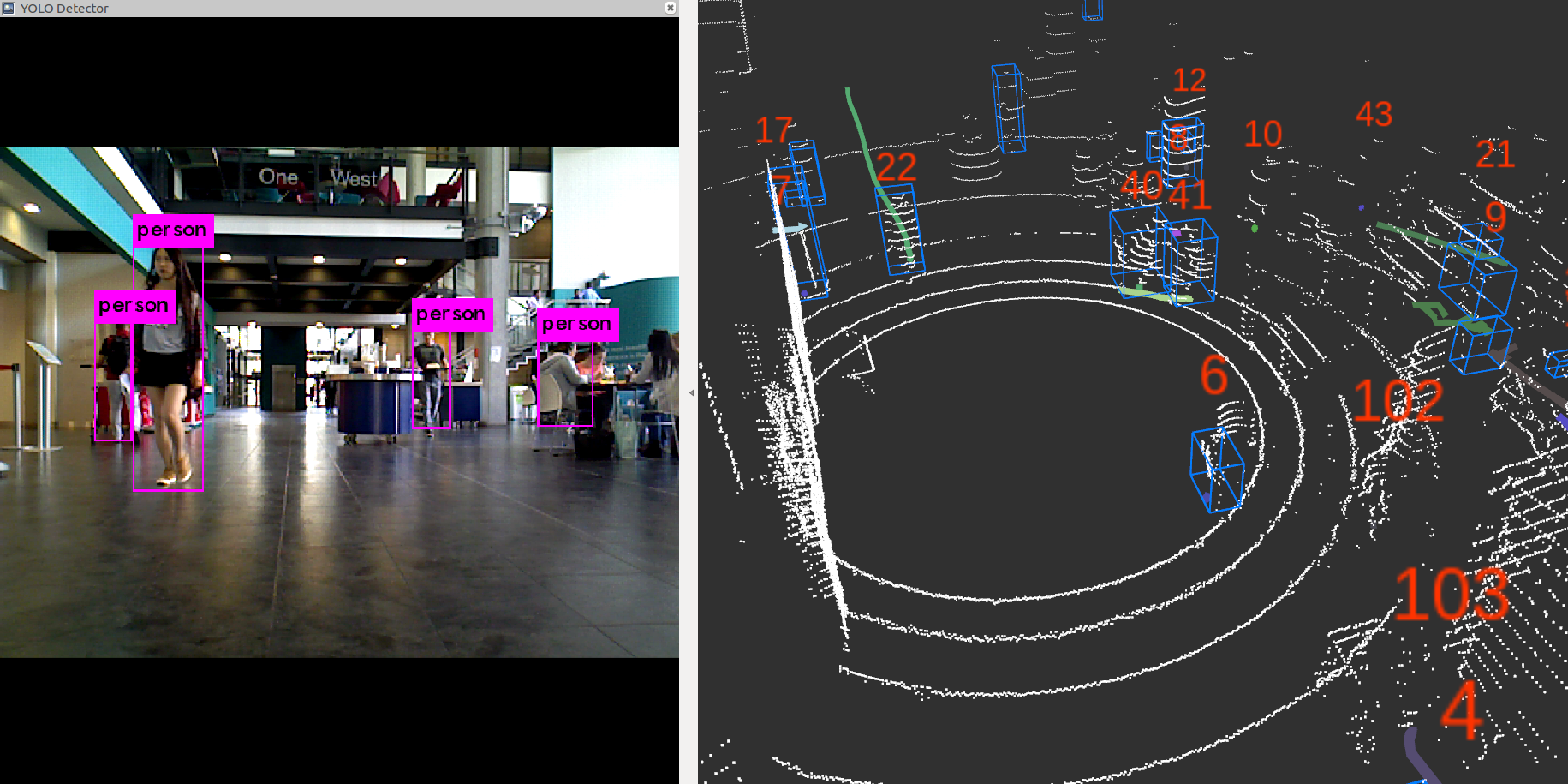

- Development and implementation of a YOLO tracker based on previous work. The tracker produces a linear approximation of the angle between each person and the robot.

- The integration of the YOLO detector into the Bayesian tracking system (presented in "Online learning for human classification in 3D LiDAR-based tracking").

- The evaluation of the new tracker and the trained classifier.
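The linear angle approximation mentioned in the first contribution can be illustrated with a minimal sketch. This is not the code in `people_position.py`; it assumes a forward-facing camera with a known horizontal field of view, and the function names, the sign convention (positive to the left) and the field-of-view parameter are all hypothetical.

```python
import math


def pixel_to_bearing(x_center, image_width, horizontal_fov_deg):
    """Map a bounding-box centre column to a bearing angle in radians.

    Linear approximation: the angle is proportional to the pixel offset
    from the image centre. 0 means straight ahead. Positive angles are to
    the left of the optical axis (an assumed convention).
    """
    if image_width <= 0:
        raise ValueError("image_width must be positive")
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    # Normalised offset in [-1, 1]: -1 at the left edge, +1 at the right edge.
    offset = (x_center - image_width / 2.0) / (image_width / 2.0)
    # Negate so that detections left of the centre give positive angles.
    return -offset * half_fov


def bbox_bearing(xmin, xmax, image_width, horizontal_fov_deg):
    """Bearing of a detection, taken at the horizontal centre of its box."""
    return pixel_to_bearing((xmin + xmax) / 2.0, image_width, horizontal_fov_deg)
```

For example, `bbox_bearing(100, 220, 640, 60)` places the box centre halfway between the left edge and the image centre, so the sketch returns half of the 30 degree half field of view, i.e. 15 degrees in radians. A pinhole camera model would use an arctangent of the offset instead, so a linear mapping like this one is only exact at the image centre and at the edges.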

In order to develop this code the following were used:

- ROS

- YOLO: Real-Time Object Detection

- Online learning for human classification in 3D LiDAR-based tracking

Assuming ROS is installed on the system (if not, follow the ROS installation instructions first):

// Install prerequisite packages

$ sudo apt-get install ros-kinetic-velodyne*

$ sudo apt-get install ros-kinetic-bfl

$ cd catkin_ws/src

For running the other trackers, install the ROS packages from here

// The core

$ git clone --recursive https://github.com/parisChatz/yolo-online-learning.git

// Build

$ cd catkin_ws

$ catkin_make -DCMAKE_BUILD_TYPE=Release

To test the YOLO tracker with a simple rosbag (one that includes a camera topic), first change the robot camera topic, if needed, in:

- /darknet_ros/darknet_ros/config/ros.yaml

- /people_yolo_angle_package/scripts/people_position.py

Then do:

$ roslaunch people_yolo_angle_package testing.launch bag:="/dir_to_rosbag/example_rosbag.bag"

To run the whole system:

$ roslaunch people_yolo_angle_package object3d_yolo.launch

This plays the rosbag specified in the launch file and starts the whole system environment, including RViz.

The results showed decreased performance when the YOLO detector was used. When YOLO was paired with other detectors, the performance appeared to increase.

It was also shown that the shortcomings of the YOLO tracker do not lie solely in the human detection.

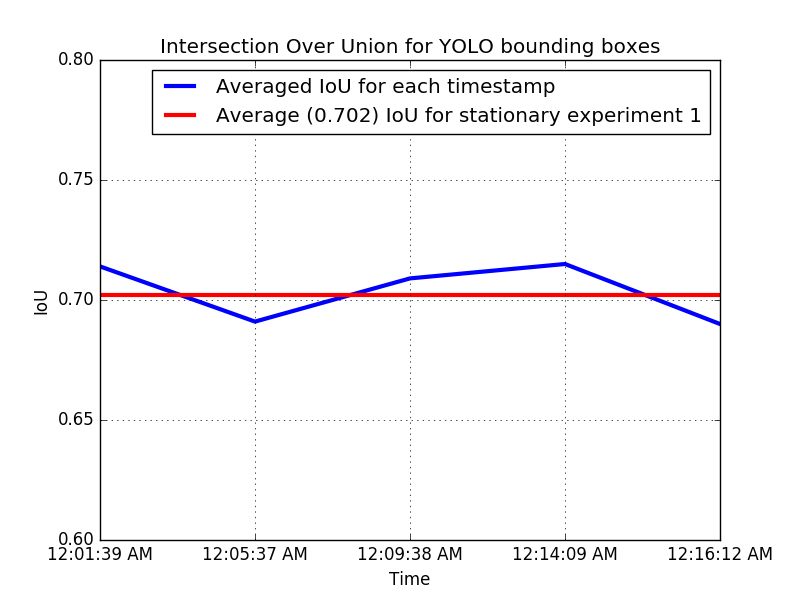

The system was also compared when fed with data from the YOLO tracker and with ground truth data, which was input to the system in the same format that YOLO produces. The results are as follows.

The evaluation of the YOLO bounding boxes showed that YOLO produces larger bounding boxes for moving people, but the weakest point of the system is the linear approximation of the angle.
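As a rough illustration of how detected boxes can be compared with ground truth boxes, the sketch below computes the intersection over union and the area ratio of two boxes. It is an assumption that these metrics resemble the evaluation used in the thesis. The box format `(xmin, ymin, xmax, ymax)` and the function names are hypothetical.

```python
def box_area(box):
    """Area of a box given as (xmin, ymin, xmax, ymax); 0 if degenerate."""
    xmin, ymin, xmax, ymax = box
    return max(0.0, xmax - xmin) * max(0.0, ymax - ymin)


def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (may be empty).
    inter_box = (
        max(box_a[0], box_b[0]),
        max(box_a[1], box_b[1]),
        min(box_a[2], box_b[2]),
        min(box_a[3], box_b[3]),
    )
    inter = box_area(inter_box)
    union = box_area(box_a) + box_area(box_b) - inter
    return inter / union if union > 0 else 0.0


def area_ratio(detected, ground_truth):
    """Detected area divided by ground truth area; above 1 means oversized."""
    gt_area = box_area(ground_truth)
    if gt_area == 0:
        raise ValueError("ground truth box has zero area")
    return box_area(detected) / gt_area
```

With a detected box `(0, 0, 12, 10)` and a ground truth box `(1, 0, 11, 10)`, the area ratio is 1.2, which is the kind of oversized detection described above for moving people.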

Possible future work:

- Improving the angle approximation of the YOLO tracker

- Training and testing the system with larger datasets