These are some code samples from various research projects, side projects and competitions.

Implementation of the "Variational Sparse Coding" paper as part of the ICLR 2019 Reproducibility Challenge. [code]
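Below is a minimal NumPy sketch of the relaxed spike-and-slab reparameterization behind Variational Sparse Coding. It assumes the encoder outputs three values per latent dimension: a mean `mu`, a log-variance `logvar` and a spike probability `gamma`. The slope `c`, the function name and the use of NumPy instead of a deep learning framework are illustrative choices. None of them are taken from the project's code.

```python
import numpy as np


def sample_spike_and_slab(mu, logvar, gamma, c=50.0, rng=None):
    """Relaxed reparameterized sample from a spike-and-slab posterior (sketch).

    Each latent dimension is the Gaussian slab sample mu + sigma * eps,
    multiplied by a soft gate sigmoid(c * (eta + gamma - 1)) with eta ~ U(0, 1).
    As c grows, the gate approaches a hard switch that is "on" with probability gamma.
    """
    rng = np.random.default_rng() if rng is None else rng
    sigma = np.exp(0.5 * logvar)
    eps = rng.standard_normal(mu.shape)        # slab noise
    eta = rng.uniform(0.0, 1.0, mu.shape)      # spike noise
    slab = mu + sigma * eps
    selection = 1.0 / (1.0 + np.exp(-c * (eta + gamma - 1.0)))  # soft spike gate
    return selection * slab
```

The sigmoid keeps the sample differentiable with respect to `mu`, `logvar` and `gamma`. Dimensions with a small `gamma` are pushed close to zero, which is what makes the code sparse.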

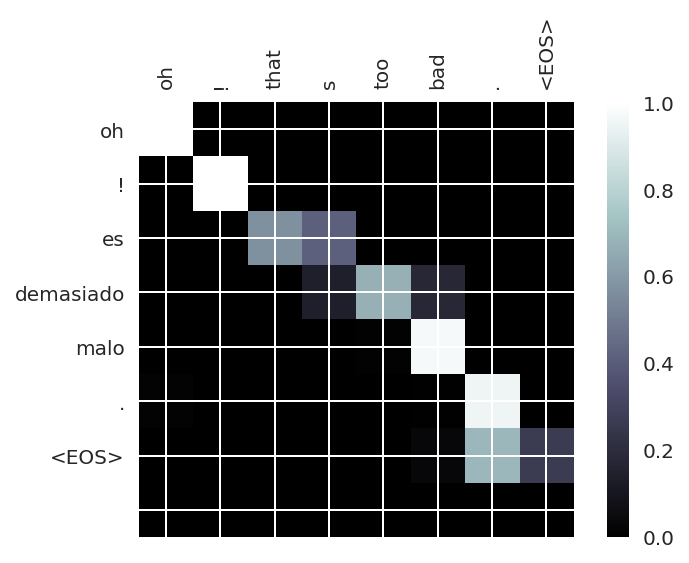

Sequence-to-sequence recurrent neural network (bidirectional LSTM) with global attention (Luong et al., 2015) and beam search, implemented in PyTorch. ~41 BLEU on a 110K-sentence English-Spanish corpus.
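Luong-style global attention works in three steps. It scores every encoder state against the current decoder state, averages the encoder states using the resulting softmax weights, and then combines that context with the decoder state. The sketch below covers one decoder step with the "general" score `score(h_t, h_s) = h_t^T W_a h_s`. It assumes a single unbatched sentence and uses NumPy rather than PyTorch so that it stays self-contained. `W_a`, `W_c` and the dimension names are hypothetical. With a bidirectional encoder, `enc_dim` would be twice the LSTM hidden size.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def luong_global_attention(h_t, enc_states, W_a, W_c):
    """One decoder step of global attention with the "general" score (sketch).

    h_t:        (dec_dim,) current decoder hidden state
    enc_states: (src_len, enc_dim) all encoder hidden states
    W_a:        (dec_dim, enc_dim) score matrix
    W_c:        (dec_dim, enc_dim + dec_dim) output projection
    """
    scores = enc_states @ W_a.T @ h_t          # h_t^T W_a h_s for every source position s
    weights = softmax(scores)                  # alignment weights a_t(s), sum to 1
    context = weights @ enc_states             # c_t = sum_s a_t(s) h_s, shape (enc_dim,)
    h_tilde = np.tanh(W_c @ np.concatenate([context, h_t]))  # attentional hidden state
    return h_tilde, weights
```

In a full model this step runs once per target position, and `h_tilde` feeds the output softmax. Beam search then keeps the k highest-scoring partial translations at each step, instead of only the single best one.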

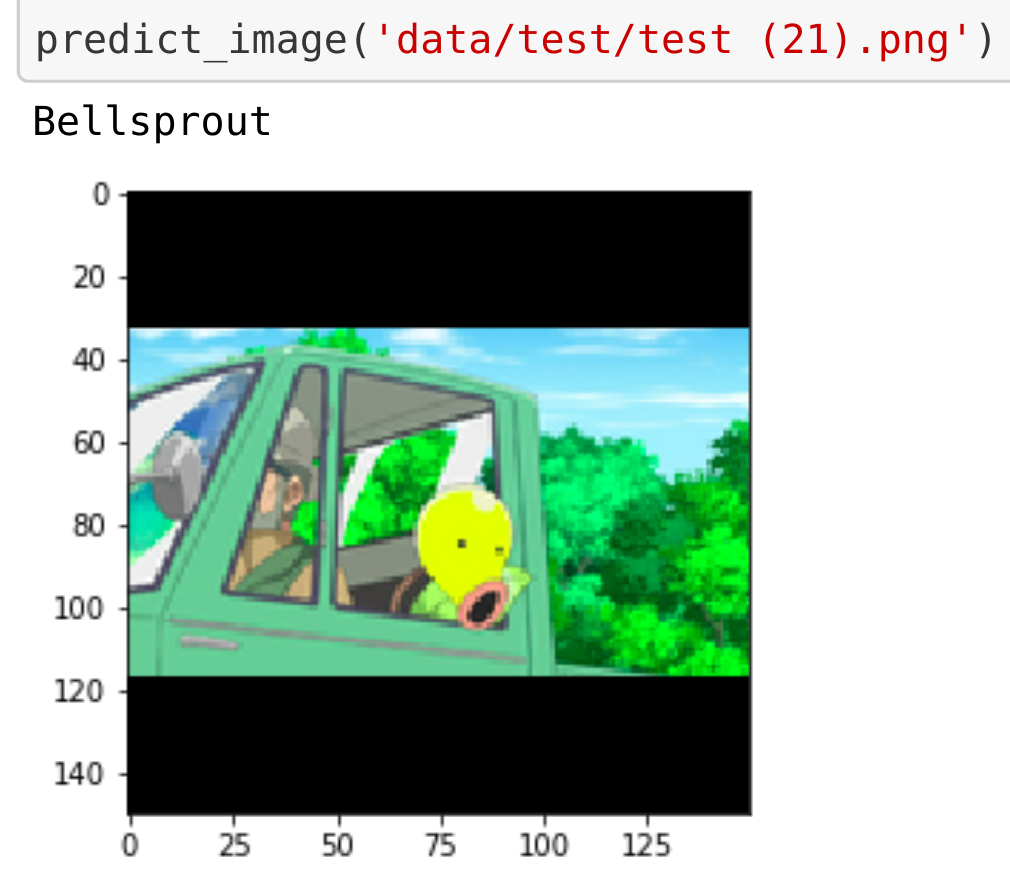

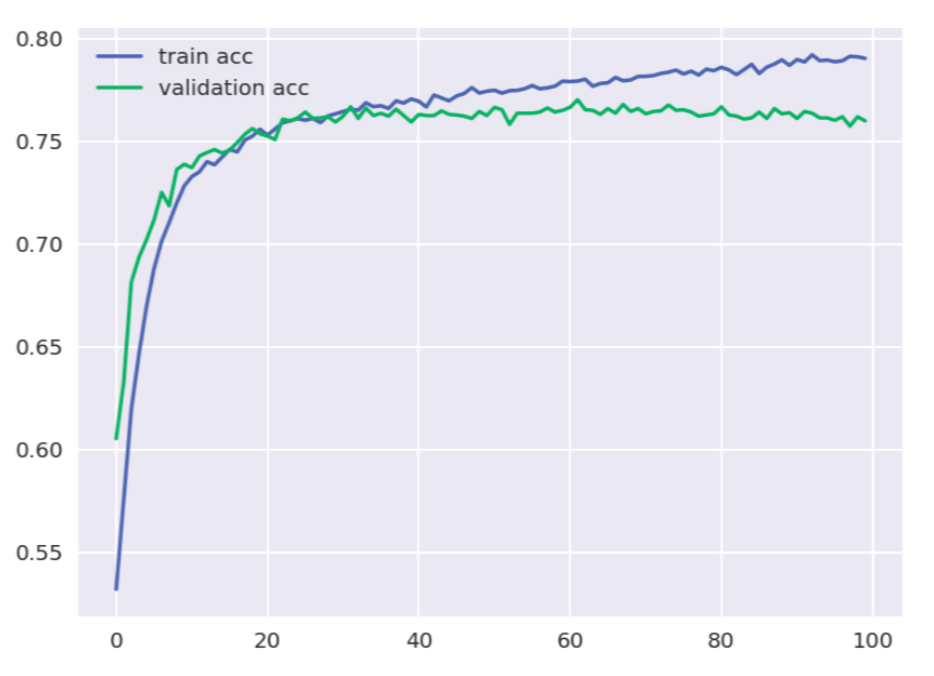

Pokémon image classification using transfer learning from an ImageNet-pretrained MobileNet convolutional neural network (Howard et al., 2017). ~82% accuracy on 27 classes with 3.8K web-scraped images. Deployed with Flask, using React for the single-page application (SPA) user interface. Presented at Infosoft 2017 and Hack Faire 2017. [slides] [demo]

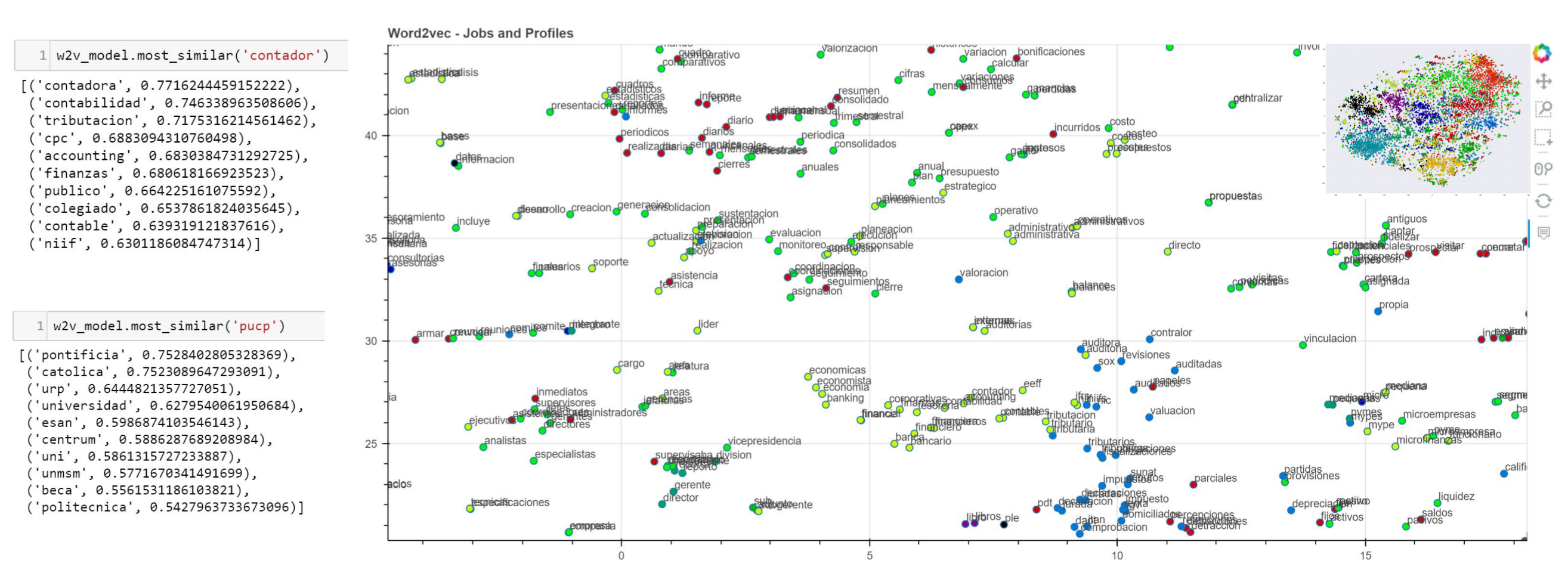

Information retrieval system that matches the textual descriptions of job postings against applicant profiles. It uses Word2Vec and Doc2Vec (Le & Mikolov, 2014) semantic search, plus string-matching algorithms for misspelled out-of-vocabulary words, all built on an inverted index for efficient lookup. Presented at WAIMLAp 2017 and Hack Faire 2017. [poster]
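A minimal sketch of the lookup side of such a system follows. It builds an inverted index from tokens to documents, then maps out-of-vocabulary query tokens (for example, misspellings) to their closest indexed spelling before lookup. Python's `difflib.get_close_matches` stands in for the project's string-matching algorithms, which the description does not name. The Word2Vec/Doc2Vec semantic scoring is left out. The function names, the `cutoff` value and the toy documents are hypothetical.

```python
import difflib
from collections import defaultdict


def build_inverted_index(docs):
    """Map each lowercase token to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            index[token].add(doc_id)
    return dict(index)


def search(query, index, cutoff=0.75):
    """Rank document ids by how many (possibly spelling-corrected) query tokens they contain."""
    vocab = list(index)
    scores = defaultdict(int)
    for token in query.lower().split():
        if token not in index:
            # Out-of-vocabulary token: fall back to the closest known spelling, if any.
            matches = difflib.get_close_matches(token, vocab, n=1, cutoff=cutoff)
            if not matches:
                continue
            token = matches[0]
        for doc_id in index[token]:
            scores[doc_id] += 1
    return sorted(scores, key=lambda d: (-scores[d], d))


docs = {
    "job1": "python developer with machine learning experience",
    "job2": "java backend developer",
    "job3": "data analyst sql",
}
index = build_inverted_index(docs)
print(search("pyhton machine learnig", index))  # ['job1']
```

The index restricts scoring to documents that share at least one token with the query. This is what keeps lookups cheap when there are many profiles.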

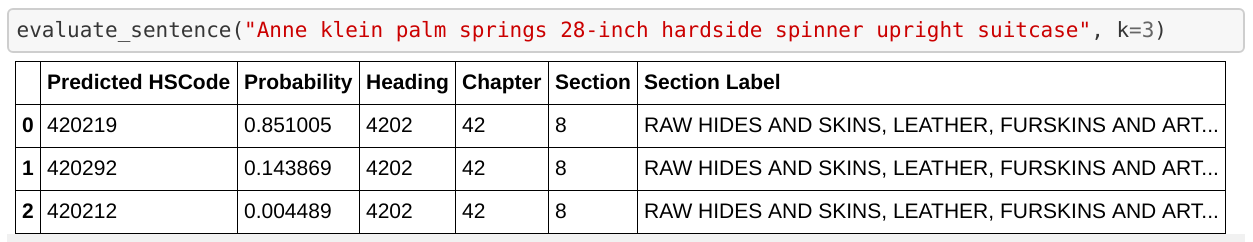

Commodity description classification using a recurrent neural network (bidirectional LSTM) implemented in PyTorch with pretrained FastText word embeddings (Joulin et al., 2016). ~92% top-5 accuracy across 3,762 classes and 30.6M text descriptions.
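Top-5 accuracy counts a prediction as correct when the true class appears anywhere among the five highest-scoring classes. It is a common metric when the label space is large. The minimal NumPy sketch below assumes the model's scores are collected into an `(n_samples, n_classes)` array. In PyTorch, `torch.topk` would play the role of `np.argpartition`.

```python
import numpy as np


def top_k_accuracy(logits, labels, k=5):
    """Fraction of rows whose true label is among the k highest-scoring classes."""
    # argpartition moves the k largest scores (in no particular order) to the last k columns.
    top_k = np.argpartition(logits, -k, axis=1)[:, -k:]
    hits = (top_k == labels[:, None]).any(axis=1)
    return hits.mean()
```

`argpartition` avoids fully sorting thousands of class scores per example. The metric only needs membership in the top k, not their order.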

Experiments with convolutional neural network architectures for binary classification of genomic sequence pairs under heavy class imbalance (0.07% positive examples). ~78.5 F1 score on ~200K sequence pairs.
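With only 0.07% positive examples, plain accuracy tells you almost nothing, because a model that never predicts a positive pair is almost always right. The F1 score is the harmonic mean of precision and recall on the positive class, so it does not reward that behavior. Here is a small pure-Python sketch on toy labels. The 7-in-10,000 split is illustrative, not the project's data.

```python
def precision_recall_f1(y_true, y_pred):
    """Binary precision, recall and F1 for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy labels at roughly the section's imbalance: 7 positives in 10,000 pairs (0.07%).
y_true = [1] * 7 + [0] * 9993
all_negative = [0] * 10000  # a degenerate model that never predicts a positive pair
accuracy = sum(t == p for t, p in zip(y_true, all_negative)) / len(y_true)
print(accuracy)                                   # 0.9993
print(precision_recall_f1(y_true, all_negative))  # (0.0, 0.0, 0.0)
```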

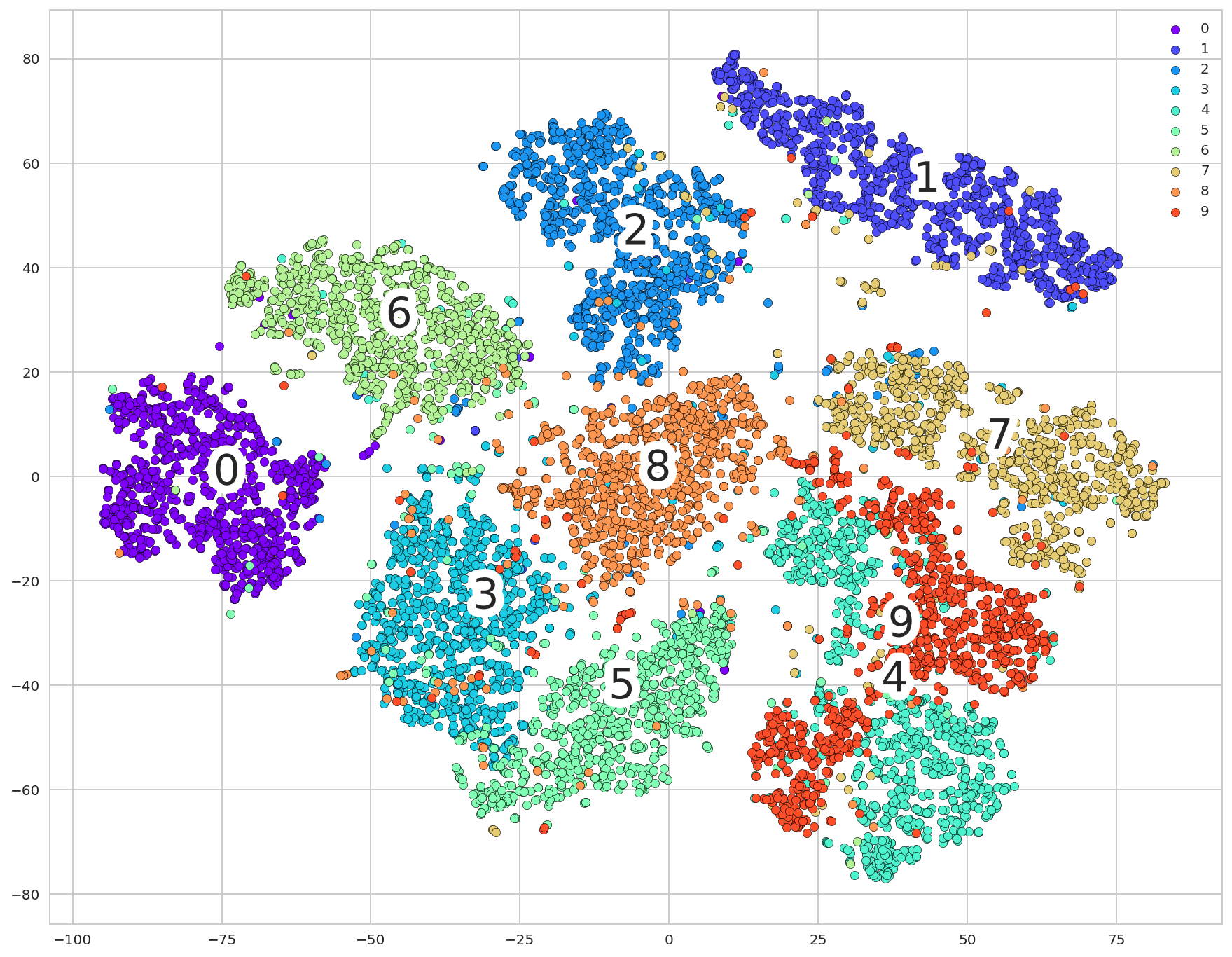

Fully connected autoencoder for the MNIST dataset with a bottleneck of size 20, implemented in PyTorch and based on a DeepBayes 2018 practical assignment. Trained with L2 reconstruction plus L1 regularization losses, reaching a loss of 0.00069. t-SNE dimensionality reduction is used to visualize the bottleneck features.
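The following is a minimal NumPy sketch of the forward pass and objective for an autoencoder of this kind. Several details are assumptions: the 784-128-20-128-784 layer sizes, the ReLU and sigmoid activations, the `l1_weight` value, and applying the L1 penalty to the 20-dimensional bottleneck code rather than to the weights. Training by backpropagation is omitted, and all names are hypothetical.

```python
import numpy as np


def init_params(seed=0, sizes=(784, 128, 20, 128, 784)):
    """Small random weights and zero biases for each fully connected layer."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, 0.01, (n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def autoencoder_loss(x, params, l1_weight=1e-3):
    """Forward pass plus training objective (sketch).

    x: (batch, 784) images scaled to [0, 1].
    Returns (loss, code, recon), where
    loss = mean squared reconstruction error + l1_weight * mean |code|.
    """
    (W1, b1), (W2, b2), (W3, b3), (W4, b4) = params
    hidden = relu(x @ W1 + b1)
    code = hidden @ W2 + b2                          # 20-d linear bottleneck
    recon = sigmoid(relu(code @ W3 + b3) @ W4 + b4)  # pixel intensities in (0, 1)
    reconstruction = np.mean((recon - x) ** 2)       # L2 reconstruction term
    sparsity = np.mean(np.abs(code))                 # L1 term on the bottleneck code
    return reconstruction + l1_weight * sparsity, code, recon
```

After training, the `(n_images, 20)` codes could be passed to scikit-learn's `sklearn.manifold.TSNE(n_components=2).fit_transform` to produce a 2-D scatter plot of the bottleneck features.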

Main preprocessing and cross-validation loop with LightGBM
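This NumPy sketch shows the usual shape of a K-fold cross-validation loop with a pluggable model. Each fold is held out once while the model trains on the remaining folds, and the out-of-fold predictions are collected for scoring. The regression setting, the RMSE metric, the fold count and the mean-prediction stand-in model are all assumptions. A LightGBM model would take the stand-in's place, and the project's preprocessing is not reproduced here.

```python
import numpy as np


def kfold_indices(n_samples, n_folds=5, seed=0):
    """Shuffle row indices once and split them into n_folds near-equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), n_folds)


def cross_validate(X, y, fit_predict, n_folds=5, seed=0):
    """Train on K-1 folds, predict the held-out fold, repeat for every fold.

    fit_predict(X_train, y_train, X_valid) -> predictions for X_valid.
    Returns the out-of-fold predictions and the per-fold RMSE.
    """
    oof = np.zeros(len(y), dtype=float)
    fold_rmse = []
    for valid_idx in kfold_indices(len(y), n_folds, seed):
        train_mask = np.ones(len(y), dtype=bool)
        train_mask[valid_idx] = False
        preds = fit_predict(X[train_mask], y[train_mask], X[valid_idx])
        oof[valid_idx] = preds
        fold_rmse.append(float(np.sqrt(np.mean((preds - y[valid_idx]) ** 2))))
    return oof, fold_rmse


def mean_baseline(X_train, y_train, X_valid):
    """Hypothetical stand-in model that predicts the training mean.

    A LightGBM model, e.g. lightgbm.LGBMRegressor().fit(X_train, y_train).predict(X_valid),
    would slot in here.
    """
    return np.full(len(X_valid), y_train.mean())
```

Out-of-fold predictions give one held-out score for the whole training set. They can also be reused as features for a second-level model.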

LSTM conditioned on a structured embedding network implemented in PyTorch (refactored version)

LSTM conditioned on a structured embedding network implemented in PyTorch

- BBVA Challenge 2017

- DrivenData Competition

- Interbank Datathon

- Kaggle Bulldozers Competition

- Kaggle Homesite Insurance Competition

- Kaggle NYC Taxi Trip Duration Competition

- CIFAR-10 with CNNs (TensorFlow)

- CelebA Image Generation with DCGANs (TensorFlow)

- Dogs vs. Cats with CNNs + Transfer Learning (Keras)

- English-French Machine Translation with Seq2seq RNNs (TensorFlow)

- The Simpsons TV Scripts Text Generation with LSTM RNNs (TensorFlow)

- LibriSpeech Speech Recognition with GRU RNNs and CNNs (Keras)