Boundary Detection by Determining the Difference of Classification Probabilities of Sequences: Topic Segmentation

Topic segmentation plays an important role in information extraction: it can be used to automatically split long documents into smaller segments, each about a single topic. The accumulated confidence scores of the sequences in a text, as predicted by a classification model, show a distinctive pattern at topic boundaries. Let's take a look at the following example.

The following is a short clinical note that has been tokenized into 23 sequences. (This note is quoted from the I2B2 NLP Dataset. For details, please visit https://www.i2b2.org/.)

1). History of present illness

2). This is a 54-year-old female with a history of cardiomyopathy, hypertension, diabetes……

3). The patient then reportedly went into VFib and was shocked once by EMS …….

4). She was intubated, received amiodarone and dopamine, as her BP ……

5). In the ED, a portable chest x-ray revealed diffuse bilateral ……

6). Pt was transferred to the ICU for further management.

7). Of note, she was recently hospitalized at Somver Vasky University Of ……

8). She was then asymptomatic at that time.

9). A fistulogram and angioplasty of her right AV fistula was performed on……

10). She has since received dialysis treatments with no complication.

-------------------------------------------------------------------------------------------------------

11). Home medications

12). At the time of admission include amitriptyline 25 mg p.o. bedtime

13). enteric-coated aspirin 325 mg p.o. daily

14). enalapril 20 mg p.o. b.i.d.,

15). Lasix 200 mg p.o. b.i.d.,

16). Losartan 50 mg p.o. daily,

17). Toprol-XL 200 mg p.o. b.i.d.,

18). Advair Diskus 250/50 one puff inhaler b.i.d.,

19). insulin NPH 50 units q.a.m. subcu and 25 units q.p.m. subcu,

20). insulin lispro 18 units subcu at dinner time,

21). Protonix 40 mg p.o. daily,

22). sevelamer 1200 mg p.o. t.i.d.,

23). tramadol 25 mg p.o. q.6 h. p.r.n. pain.

Suppose we want to split a clinical note into five categories: History, Medications, Hospital Course, Laboratories and Physical Examination. These are among the best-known topics in Electronic Medical Records (EMR), the systematized collection of patient and population health information stored electronically in a digital format. We apply a pre-trained classifier to predict the confidence scores (the probabilities of belonging to each topic) of each sequence $s_i$ and assign it a score vector $\mathbf{v}_i$.

After obtaining the score vectors of all sequences, we calculate the accumulated confidence score at the position of the current sequence by adding the current sequence's score vector to the score vectors of all previous sequences.

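Formally, the pre-trained classifier assigns each sequence $s_i$ a 5-dimensional score vector

$$\mathbf{v}_i = \big(P(c_1 \mid s_i),\ P(c_2 \mid s_i),\ \dots,\ P(c_5 \mid s_i)\big)$$

and the accumulated scores up to position $t$ are collected in $\rho = (\rho_1, \dots, \rho_t)$. Each element of $\rho$ is obtained by:

$$\rho_{t,c} = \sum_{i=1}^{t} P(c \mid s_i)$$

Where:

- $c$: the class (topic);
- $t$: the position of the $t$-th tokenized sentence or sequence $s$.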
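As a minimal sketch of the accumulation formula, assuming the per-sequence score vectors are already available as plain Python lists (the helper name `accumulate_scores` is hypothetical, not part of TopicSeg), the running sum can be computed in a single pass:

```python
from typing import List

Vector = List[float]  # one probability per topic, e.g. 5 entries for clinical notes


def accumulate_scores(score_vectors: List[Vector]) -> List[Vector]:
    """Return rho_1..rho_n with rho_t[c] = sum of P(c | s_i) for i = 1..t."""
    accumulated: List[Vector] = []
    # start from zero for every topic
    running: Vector = [0.0] * len(score_vectors[0]) if score_vectors else []
    for v in score_vectors:
        # add the current sequence's scores to the total of all previous sequences
        running = [r + p for r, p in zip(running, v)]
        accumulated.append(running)
    return accumulated


# Hypothetical 2-topic scores for three sequences (not real classifier output).
rho = accumulate_scores([[0.75, 0.25], [0.5, 0.5], [0.25, 0.75]])
print(rho)  # [[0.75, 0.25], [1.25, 0.75], [1.5, 1.5]]
```

Each `rho[t - 1]` holds the accumulated vector at position `t`, so plotting one topic's entry against `t` gives one curve of the pictures below.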

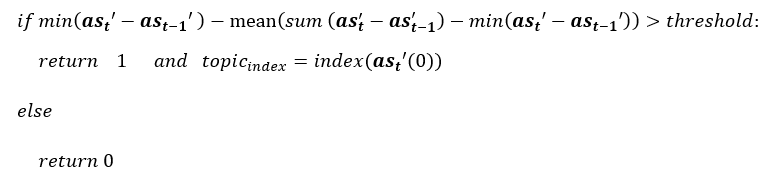

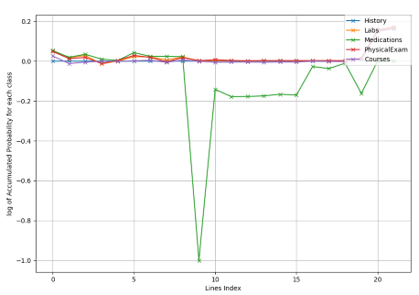

The following two pictures show the accumulated scores $\rho$ obtained with the classifiers based on the NB (left) and SVM (right) models. The vertical axis represents the accumulated score of each topic, and the horizontal axis the position of each sequence.

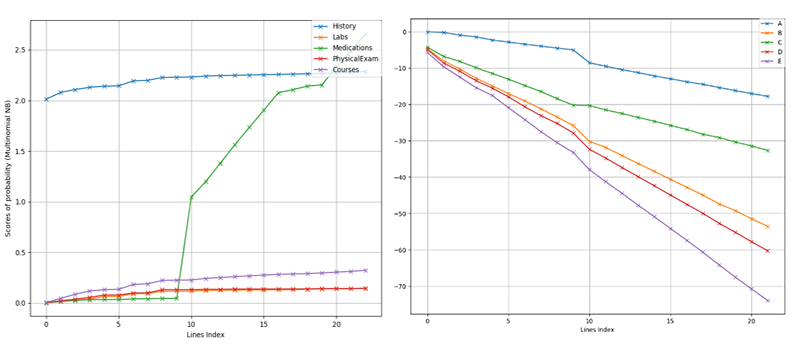

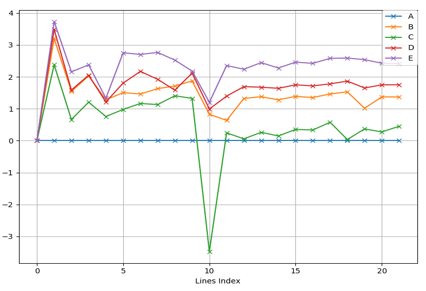

To take a closer look at how these five scores vary, initialize each accumulated score vector by subtracting its maximum value:

$$\rho^{\text{initial}}_{t,c} = \rho_{t,c} - \max_{c'} \rho_{t,c'}$$

This gives a new vector $\rho^{\text{initial}}_t$, in which the currently leading topic always has score 0. The following shows $\rho^{\text{initial}}$ based on the NB (left) and SVM (right) models.
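A sketch of the initialization step, assuming the maximum is taken over the topic scores at each position (`initialize_scores` is a hypothetical helper, not a TopicSeg function):

```python
from typing import List


def initialize_scores(accumulated: List[List[float]]) -> List[List[float]]:
    """rho_initial_t[c] = rho_t[c] - max over c' of rho_t[c']."""
    initial = []
    for rho_t in accumulated:
        peak = max(rho_t)  # accumulated score of the currently leading topic
        # the leader maps to 0, every other topic to its (negative) gap from the leader
        initial.append([x - peak for x in rho_t])
    return initial


print(initialize_scores([[0.75, 0.25], [1.25, 0.75], [1.5, 1.5]]))
# [[0.0, -0.5], [0.0, -0.5], [0.0, 0.0]]
```

While one topic keeps dominating, the other topics drift further below 0; a topic that starts to catch up moves back toward 0, which is what the next step looks for.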

Then take the backward difference of $\rho^{\text{initial}}$ with respect to the sequence position:

$$\Delta\rho_{t,c} = \rho^{\text{initial}}_{t,c} - \rho^{\text{initial}}_{t-1,c}$$

Plotted against the sequence position, $\Delta\rho$ shows a sharp change at sequence 10, which is the ground-truth boundary.
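The backward difference and the position of the sharpest change can be sketched as follows. `backward_difference` and `sharpest_change` are hypothetical helpers, and ranking positions by the largest rise of any topic's initialized score (a non-leading topic catching up with the leader) is an assumption made for illustration:

```python
from typing import List


def backward_difference(initial: List[List[float]]) -> List[List[float]]:
    """delta_t[c] = initial_t[c] - initial_{t-1}[c] for t = 2..n (list index 0 is t = 2)."""
    return [[cur - prev for cur, prev in zip(row_t, row_prev)]
            for row_prev, row_t in zip(initial, initial[1:])]


def sharpest_change(delta: List[List[float]]) -> int:
    """1-based position t with the largest rise of any topic's initialized score."""
    rises = [max(row) for row in delta]  # a topic catching up with the leader rises
    return rises.index(max(rises)) + 2   # delta[0] corresponds to t = 2


delta = backward_difference([[0.0, -0.5], [0.0, -1.0], [0.0, -0.5]])
print(delta)                   # [[0.0, -0.5], [0.0, 0.5]]
print(sharpest_change(delta))  # 3
```

Within a topic the differences are zero or negative (the gap to the leader widens), so only a rise stands out; this mirrors the sharp change seen at the ground-truth boundary.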

The topic score predictor gives each sequence or sentence five scores, each of which is the probability of belonging to the corresponding topic. The segmenter then uses the predicted probability $P(c \mid s_t)$ of each tokenized sequence $s_t$ belonging to each category $c$ for boundary detection, by determining the difference of $\rho_t$ with respect to $t$, where $s_1, \dots, s_n$ represent the $n$ tokenized sequences.

In this project, Naive Bayes (NB) and linear SVM models with bag-of-words (BOW) features are mainly employed to train the Topic Score Predictor. Other types of classification models might also work.
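The text does not say how the predictor is implemented. Purely as an illustration, here is a self-contained multinomial Naive Bayes over bag-of-words counts that returns a score vector $P(c \mid s)$ per sentence; the class `BowNaiveBayes` and the training sentences are hypothetical, and this is not TopicSeg's own code:

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List


class BowNaiveBayes:
    """Multinomial Naive Bayes over bag-of-words counts (illustrative sketch)."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha  # Laplace (add-alpha) smoothing

    def fit(self, sentences: List[str], labels: List[str]) -> "BowNaiveBayes":
        self.classes = sorted(set(labels))
        self.class_counts = Counter(labels)
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        for sentence, label in zip(sentences, labels):
            self.word_counts[label].update(sentence.lower().split())  # bag of words
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict_proba(self, sentence: str) -> List[float]:
        """Score vector v: P(c | s) for each c in self.classes, summing to 1."""
        n_sentences = sum(self.class_counts.values())
        log_scores = []
        for c in self.classes:
            score = math.log(self.class_counts[c] / n_sentences)  # log prior
            denom = self.total_words[c] + self.alpha * len(self.vocab)
            for word in sentence.lower().split():
                if word in self.vocab:  # words unseen in training are skipped
                    score += math.log((self.word_counts[c][word] + self.alpha) / denom)
            log_scores.append(score)
        top = max(log_scores)  # log-sum-exp normalization for numerical stability
        exps = [math.exp(s - top) for s in log_scores]
        total = sum(exps)
        return [e / total for e in exps]


# Hypothetical training data; the real predictor is trained on the labelled dataset.
nb = BowNaiveBayes().fit(
    ["history of present illness", "she was intubated in the ed",
     "aspirin 325 mg p.o. daily", "lasix 200 mg p.o. b.i.d."],
    ["History", "History", "Medications", "Medications"],
)
print(nb.classes)  # ['History', 'Medications']
v = nb.predict_proba("protonix 40 mg p.o. daily")  # Medications gets the larger share
```

A linear SVM would need an extra calibration step to turn its margins into probabilities; NB produces them directly, which is why it is used for this sketch.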

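1. Tokenize text $T$ into $n$ (the number of tokenized sentences) sentences $s_1, s_2, \dots, s_n$;
2. Let $t = 1$;
3. The topic score predictor assigns a score vector to each sequence $s_1, \dots, s_t$ and obtains the accumulated vector $\rho$;
4. Analyze vector $\rho$:
   - If the analysis returns 0, let $t = t + 1$. If all $n$ sequences have been analyzed, $T$ is one segment; else, go back to step 3;
   - If it returns 1, a boundary is detected at position $t$. In other words, the sequences of $T$ up to the boundary form a segment that discusses topic $c^*$, the topic with the highest accumulated score. Simultaneously, let $T$ be the remaining text and go back to step 1 to segment it;
5. Segmentation finished.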
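The steps above can be sketched as a greedy left-to-right loop. The predictor and the boundary test are passed in as functions, because their exact form is not fixed here; the function `segment`, its parameters, and the convention that the sentence triggering the boundary opens the next segment are all assumptions of this sketch:

```python
from typing import Callable, List, Tuple

ScoreFn = Callable[[str], List[float]]            # sentence -> P(c | s) for each topic
BoundaryFn = Callable[[List[List[float]]], bool]  # accumulated vectors so far -> boundary?


def segment(sentences: List[str], predict: ScoreFn, is_boundary: BoundaryFn,
            labels: List[str]) -> List[Tuple[str, List[str]]]:
    """Greedy left-to-right segmentation; returns (topic label, sentences) pairs."""
    segments: List[Tuple[str, List[str]]] = []
    k = 0  # index of the first sentence of the remaining text
    while k < len(sentences):
        accumulated: List[List[float]] = []
        running = [0.0] * len(labels)
        end = len(sentences)  # if no boundary is found, the rest is one segment
        for t in range(k, len(sentences)):
            # accumulate this sentence's scores onto those of the previous ones
            running = [r + p for r, p in zip(running, predict(sentences[t]))]
            accumulated.append(running)
            if t > k and is_boundary(accumulated):
                end = t  # assumed convention: sentence t opens the next segment
                break
        # label the closed segment with its highest accumulated topic score
        closing = accumulated[end - k - 1]
        segments.append((labels[closing.index(max(closing))], sentences[k:end]))
        k = end  # continue with the remaining text
    return segments


# Toy usage with hypothetical scores and a hypothetical boundary test.
probs = {"a1": [0.75, 0.25], "a2": [0.75, 0.25], "b1": [0.25, 0.75], "b2": [0.25, 0.75]}


def leader_switch(acc: List[List[float]]) -> bool:
    step = [x - y for x, y in zip(acc[-1], acc[-2])]  # scores of the newest sentence
    return step.index(max(step)) != acc[-2].index(max(acc[-2]))


print(segment(["a1", "a2", "b1", "b2"], probs.__getitem__, leader_switch, ["A", "B"]))
# [('A', ['a1', 'a2']), ('B', ['b1', 'b2'])]
```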

The idea of the analysis step is to track how each topic score varies as the position of the analyzed sequence moves forward, and to detect a boundary where this variation changes sharply.

Based on the example above, the vector $\rho$ is analyzed through its backward difference $\Delta\rho_t$, and a boundary is reported when the change exceeds a threshold.

The best threshold tested so far is 0.3 for the NB-based topic score predictor and … for the SVM-based predictor.
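The exact test is not spelled out in the text, so the following is only a hypothetical boundary test consistent with the steps described earlier: subtract the per-position maximum, take the backward difference, and fire when some topic rises by more than the threshold (0.3 by default, the NB value given above). The name `threshold_boundary` is an assumption, not a TopicSeg function:

```python
from typing import List


def threshold_boundary(accumulated: List[List[float]], threshold: float = 0.3) -> bool:
    """Hypothetical test: True if, after subtracting the per-position maximum, some
    topic's accumulated score rises by more than `threshold` from position t-1 to t."""
    if len(accumulated) < 2:
        return False  # a backward difference needs two positions
    prev, cur = accumulated[-2], accumulated[-1]
    prev_max, cur_max = max(prev), max(cur)
    # backward difference of the max-subtracted ("initialized") vectors
    rise = max((c - cur_max) - (p - prev_max) for c, p in zip(cur, prev))
    return rise > threshold


print(threshold_boundary([[0.75, 0.25], [1.5, 0.5]]))                # False: same topic continues
print(threshold_boundary([[0.75, 0.25], [1.5, 0.5], [1.75, 1.25]]))  # True: topic 2 catches up
```

With a fixed leading topic, the rise equals how much more the newest sentence favors another topic than the leader, so the threshold directly bounds that preference.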

class `TopicSeg.topic_seg.segmenter(predictor_model='nb', dataset_file="../Datasets/LabeledDataset.txt", labels_dic={'A':'History','B':'Labs','C':'Medications','D':'PhysicalExam','E':'Courses'}, threshold=0.3)`

- predictor_model: string, optional (default='nb')
  - Classification model for the Topic Score Predictor.
- dataset_file: string, optional (default="../Datasets/LabeledDataset.txt")
  - Path of the labelled dataset. This parameter should be changed for different segmentation tasks; the default path is used for topic segmentation of clinical notes.
- labels_dic: dictionary, optional (default={'A':'History','B':'Labs','C':'Medications','D':'PhysicalExam','E':'Courses'})
  - Labels used in the dataset. This parameter should be changed for different segmentation tasks; the default labels are used for topic segmentation of clinical notes.
- threshold: float, optional (default=0.3, range=[0,1])
  - Threshold used for boundary detection.

- get_Boundary_Position(notelines): Get the topic label of every sequence; a boundary lies wherever the label changes.
  - Parameter: notelines: the list of text lines to be segmented.
  - Return: list of topic labels, one per sequence.
- get_Seg_index(notelines): Get the segments and their boundaries.
  - Parameter: notelines: the list of text lines to be segmented.
  - Return: list of [topic label, number of sequences] pairs, one per segment.
- print_Segs(notelines): Print out the segmented text, with a header line for each topic.
  - Parameter: notelines: the list of text lines to be segmented.
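In the usage example that follows, the output of `get_Seg_index` looks like a run-length encoding of the per-sequence labels returned by `get_Boundary_Position` (8 + 2 + 5 + 4 = 19 labels). Assuming that relationship, these hypothetical helpers (not part of TopicSeg) reproduce it and convert the run lengths into the 1-based position of the last sequence of each segment:

```python
from itertools import accumulate, groupby
from typing import List


def labels_to_segments(labels: List[str]) -> List[list]:
    """Collapse per-sequence topic labels into [label, run length] pairs."""
    return [[label, len(list(run))] for label, run in groupby(labels)]


def segment_end_positions(segments: List[list]) -> List[int]:
    """1-based position of the last sequence of each segment."""
    return list(accumulate(length for _, length in segments))


labels = ['A'] * 8 + ['C'] * 2 + ['D'] * 5 + ['E'] * 4
segs = labels_to_segments(labels)
print(segs)                         # [['A', 8], ['C', 2], ['D', 5], ['E', 4]]
print(segment_end_positions(segs))  # [8, 10, 15, 19]
```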

```python
>>> from TopicSeg.topic_seg import segmenter
>>> # notelines: the list of text lines of a clinical note ("note.txt" is a placeholder path)
>>> notelines = open("note.txt").read().splitlines()
>>> mysegmenter = segmenter()  # default NB predictor and clinical-note labels
>>> boundary_position = mysegmenter.get_Boundary_Position(notelines)
>>> print(boundary_position)
['A', 'A', 'A', 'A', 'A', 'A', 'A', 'A', 'C', 'C', 'D', 'D', 'D', 'D', 'D', 'E', 'E', 'E', 'E']
>>> boundary_index = mysegmenter.get_Seg_index(notelines)
>>> print(boundary_index)
[['A', 8], ['C', 2], ['D', 5], ['E', 4]]
>>> mysegmenter.print_Segs(notelines)
========History==========
history of present illness ...
========Medications==========
The medications ....
```

References:

Ruan, Wei, and Won-sook Lee. "Boundary Detection by Determining the Difference of Classification Probabilities of Sequences: Topic Segmentation of Clinical Notes." 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM). IEEE, 2018.

Ruan, Wei, et al. "Pictorial Visualization of EMR Summary Interface and Medical Information Extraction of Clinical Notes." 2018 IEEE International Conference on Computational Intelligence and Virtual Environments for Measurement Systems and Applications (CIVEMSA). IEEE, 2018.

Fournier, Chris, and Diana Inkpen. "Segmentation Similarity and Agreement." Proceedings of the 2012 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 152-161. Association for Computational Linguistics, 2012.

Fournier, Chris. "Segmentation Evaluation using SegEval." 2012. https://segeval.readthedocs.io/en/latest/#fournierinkpen2012