Autonomous and cooperative design of the monitor positions for a team of UAVs to maximize the quantity and quality of detected objects

This project deals with the problem of positioning a swarm of UAVs inside a completely unknown terrain, with the objective of maximizing the overall situational awareness.

For full description and performance analysis, please check out our companion paper.

The AirSim platform was used to evaluate the performance of the swarm.

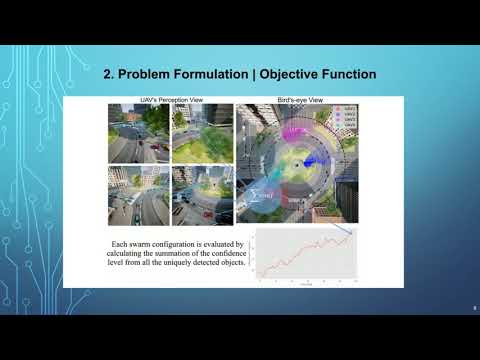

The implemented algorithm is not tailored to the dynamics of either the UAVs or the environment. Instead, it learns the most effective swarm formations for the underlying monitoring task directly from real-time images. To evaluate the swarm formation at each iteration, the images from the UAVs are fed to a novel computation scheme that assigns a single scalar score, taking into account the number and quality of all unique objects of interest.
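To make the idea of a single scalar score concrete, here is a toy Python sketch. It is not the scoring formula from the paper: it assumes, hypothetically, that detections from different UAVs have already been matched so that each one carries an `object_id`, and it simply credits every unique object once, with the best confidence any UAV achieved for it. The names `Detection` and `swarm_score` are illustrative only.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Detection:
    uav_id: int        # which UAV's image produced the detection
    object_id: str     # HYPOTHETICAL: identity of the object after cross-UAV matching
    confidence: float  # detector confidence in [0, 1]


def swarm_score(detections: Iterable[Detection]) -> float:
    """Toy score (NOT the paper's formula): each unique object counts once,
    weighted by the best confidence any UAV obtained for it."""
    best: Dict[str, float] = {}
    for det in detections:
        # keep only the highest-confidence view of each unique object
        best[det.object_id] = max(best.get(det.object_id, 0.0), det.confidence)
    # more unique objects and better views both increase the score
    return sum(best.values(), 0.0)


if __name__ == "__main__":
    dets: List[Detection] = [
        Detection(0, "car-1", 0.60),
        Detection(1, "car-1", 0.90),     # same car, seen better by UAV 1
        Detection(1, "person-3", 0.75),
    ]
    print(round(swarm_score(dets), 2))  # 1.65
```

Taking the maximum per object means a second UAV looking at an already well-seen object adds nothing, which pushes formations toward covering new objects or improving weak views.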

The following video presents our work.

The ConvCAO_AirSim repository contains the following applications:

- appConvergence: Positioning a swarm of UAVs inside a completely unknown terrain, with the objective of maximizing the overall situational awareness.

- appExhaustiveSearch: Centralized, semi-exhaustive methodology.

- appLinux: Implementation for the Linux OS.

- appNavigate: Navigating a UAV swarm along a predetermined path.
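As an illustration of the kind of task appNavigate performs, the sketch below flies several drones along a fixed list of waypoints with the AirSim Python client (`MultirotorClient`, `takeoffAsync`, `moveOnPathAsync`). It is not the code in appNavigate: the helper names `square_path` and `fly_swarm_on_path`, the drone names and the square path are hypothetical. We assume waypoints in AirSim's NED frame (z is negative above the ground) and that each vehicle interprets them relative to its own start position.

```python
from typing import List, Sequence, Tuple

Waypoint = Tuple[float, float, float]  # (x, y, z) in metres, NED frame


def square_path(side: float, altitude: float) -> List[Waypoint]:
    """Corners of a square, ending back above the start point.

    AirSim uses NED coordinates, so flying above the ground means negative z.
    """
    z = -abs(altitude)
    return [(side, 0.0, z), (side, side, z), (0.0, side, z), (0.0, 0.0, z)]


def fly_swarm_on_path(vehicle_names: Sequence[str],
                      waypoints: Sequence[Waypoint],
                      velocity: float = 3.0) -> None:
    """Hypothetical helper: arm, take off and fly every vehicle along the path."""
    import airsim  # imported lazily so square_path() works without AirSim installed

    client = airsim.MultirotorClient()
    client.confirmConnection()
    for name in vehicle_names:
        client.enableApiControl(True, vehicle_name=name)
        client.armDisarm(True, vehicle_name=name)

    # issue all take-off commands first, then wait for each to finish
    for future in [client.takeoffAsync(vehicle_name=n) for n in vehicle_names]:
        future.join()

    path = [airsim.Vector3r(x, y, z) for x, y, z in waypoints]
    # start every vehicle on the path concurrently, then wait for all of them
    futures = [client.moveOnPathAsync(path, velocity, vehicle_name=n)
               for n in vehicle_names]
    for future in futures:
        future.join()


# Example (requires a running AirSim simulation whose settings.json
# declares vehicles named "Drone1" and "Drone2"):
# fly_swarm_on_path(["Drone1", "Drone2"], square_path(side=20.0, altitude=10.0))
```

The commands are sent asynchronously and joined afterwards so that the drones move at the same time rather than one after another.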

This section provides a step-by-step guide for installing the ConvCAO_AirSim framework.

First, install the required Python packages (NOTE: most of the experiments were conducted in a conda environment, so we strongly advise you to install and use a conda virtual environment):

$ pip install airsim Shapely descartes opencv-contrib-python==4.1.26
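Once the packages are installed, a quick way to confirm that Python can find them is to look up their import names, which differ from the pip names for Shapely and OpenCV. This is a convenience sketch, not part of the repository; `find_modules` and `REQUIRED` are hypothetical names.

```python
import importlib.util
from typing import Dict, Iterable

# pip package name -> name used in `import` statements
REQUIRED = {
    "airsim": "airsim",
    "Shapely": "shapely",
    "descartes": "descartes",
    "opencv-contrib-python": "cv2",
}


def find_modules(module_names: Iterable[str]) -> Dict[str, bool]:
    """Return {module_name: True if Python can locate it, else False}.

    find_spec() only locates a top-level module; it does not import it.
    """
    return {name: importlib.util.find_spec(name) is not None for name in module_names}


if __name__ == "__main__":
    found = find_modules(REQUIRED.values())
    for pip_name, module in REQUIRED.items():
        status = "ok" if found[module] else "MISSING"
        print(f"{pip_name:<24} import {module:<10} {status}")
```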

Second, you need a detector capable of producing bounding boxes of objects, along with the corresponding confidence levels, from RGB images.

For the needs of our application we used the YOLOv3 detector, trained on the COCO dataset. You can download this detector from here. After downloading the file, extract the yolo-coco folder inside your local ConvCAO_AirSim folder.

It is worth highlighting that you could use a different detector (tailored to the application's needs), as the proposed methodology is agnostic to the choice of detector.
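Because any detector that returns boxes and confidences will do, the sketch below shows one possible way to obtain them from a YOLOv3 Darknet model through OpenCV's `cv2.dnn` module, followed by a simple greedy per-class non-maximum suppression. It is not the repository's detector code: the file names `yolov3.cfg` and `yolov3.weights` inside the yolo-coco folder, the 416x416 input size, the thresholds and all helper names are assumptions, and it follows the common OpenCV-tutorial convention of using the best class score as the confidence.

```python
import os
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]     # (x, y, width, height) in pixels
Detection = Tuple[Box, int, float]  # (box, class_id, confidence)


def decode_yolo_rows(rows: Sequence[Sequence[float]], img_w: int, img_h: int,
                     conf_thresh: float = 0.5) -> List[Detection]:
    """Turn raw YOLOv3 output rows into (box, class_id, confidence) tuples.

    Each row is [cx, cy, w, h, objectness, score_class0, score_class1, ...],
    with box values normalised to [0, 1] of the image size.
    """
    out: List[Detection] = []
    for row in rows:
        scores = row[5:]
        class_id = max(range(len(scores)), key=lambda i: scores[i])
        confidence = float(scores[class_id])
        if confidence < conf_thresh:
            continue
        cx, cy, w, h = (float(v) for v in row[:4])
        bw, bh = int(w * img_w), int(h * img_h)
        # convert centre coordinates to the top-left corner
        box = (int(cx * img_w - bw / 2), int(cy * img_h - bh / 2), bw, bh)
        out.append((box, class_id, confidence))
    return out


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two (x, y, w, h) boxes."""
    iw = max(0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nms(dets: List[Detection], iou_thresh: float = 0.4) -> List[Detection]:
    """Greedy per-class NMS: keep the most confident box and drop
    same-class boxes that overlap an already kept box by iou_thresh or more."""
    kept: List[Detection] = []
    for det in sorted(dets, key=lambda d: d[2], reverse=True):
        if all(det[1] != k[1] or iou(det[0], k[0]) < iou_thresh for k in kept):
            kept.append(det)
    return kept


def load_yolo(folder: str = "yolo-coco"):
    import cv2  # imported lazily so the pure-Python helpers work without OpenCV
    return cv2.dnn.readNetFromDarknet(os.path.join(folder, "yolov3.cfg"),
                                      os.path.join(folder, "yolov3.weights"))


def detect(net, image, conf_thresh: float = 0.5, iou_thresh: float = 0.4) -> List[Detection]:
    """Run the network on a BGR image (e.g. from cv2.imread) and post-process."""
    import cv2
    h, w = image.shape[:2]
    blob = cv2.dnn.blobFromImage(image, 1 / 255.0, (416, 416), swapRB=True, crop=False)
    net.setInput(blob)
    outputs = net.forward(net.getUnconnectedOutLayersNames())
    rows = [row for out in outputs for row in out]
    return nms(decode_yolo_rows(rows, w, h, conf_thresh), iou_thresh)
```

Keeping the decoding and suppression separate from `detect` makes it easy to swap in another detector: anything that yields `(box, class_id, confidence)` tuples can feed the same downstream code.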

Download any of the available AirSim environments.

To run an example with the Convergence testbed, you just need to replace the "detector-path" entry inside this file with the path to the previously downloaded detector.

Finally, run the "MultiAgent.py" script:

$ python MultiAgent.py

Detailed instructions for running specific applications are provided inside each corresponding app folder.

By combining the information extracted from the depth image with the focal length of the camera, we can recreate the 3D perspective of each UAV.
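The back-projection behind this step can be sketched with the pinhole camera model: a pixel (u, v) with depth Z maps to X = (u - c_x) Z / f and Y = (v - c_y) Z / f in the camera frame. The NumPy sketch below assumes a planar depth image (distance along the optical axis, in metres), square pixels and a principal point at the image centre, and derives the focal length from the horizontal field of view (for instance the `fov` reported by AirSim's `simGetCameraInfo`). The helper names are hypothetical and this is not the repository's implementation.

```python
import numpy as np


def focal_length_px(image_width: int, fov_deg: float) -> float:
    """Pinhole focal length in pixels from the horizontal field of view."""
    return image_width / (2.0 * np.tan(np.radians(fov_deg) / 2.0))


def depth_to_points(depth: np.ndarray, f: float) -> np.ndarray:
    """Back-project a planar depth image (H x W, metres) into an N x 3 array
    of points in the camera frame (x right, y down, z forward)."""
    h, w = depth.shape
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0  # assumed principal point: image centre
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # column and row index of each pixel
    z = depth.astype(float)
    x = (u - cx) * z / f
    y = (v - cy) * z / f
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    # drop invalid pixels (NaN/inf or non-positive depth)
    valid = np.isfinite(points).all(axis=1) & (points[:, 2] > 0)
    return points[valid]
```

If the simulator instead returns perspective depth (Euclidean distance to the camera centre), it has to be converted to planar depth before applying these formulas.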

By combining the aforementioned 3D reconstructions of all UAVs, we can generate a point cloud of the whole environment.
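Merging the per-UAV reconstructions amounts to expressing every point in a common world frame, p_world = R p + t, where (R, t) is the pose of each camera. The sketch below assumes a forward-facing camera, AirSim-style NED body axes (x forward, y right, z down), and poses already expressed in one shared world frame as a position plus a unit quaternion (w, x, y, z). The function names are hypothetical.

```python
import numpy as np

# Camera optical frame (x right, y down, z forward) -> body frame
# (x forward, y right, z down), assuming a forward-facing camera.
CAM_TO_BODY = np.array([[0.0, 0.0, 1.0],
                        [1.0, 0.0, 0.0],
                        [0.0, 1.0, 0.0]])


def quat_to_rot(w: float, x: float, y: float, z: float) -> np.ndarray:
    """Rotation matrix from a quaternion (w, x, y, z); normalised first."""
    n = np.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z),     2 * (x * z + w * y)],
        [2 * (x * y + w * z),     1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y),     2 * (y * z + w * x),     1 - 2 * (x * x + y * y)],
    ])


def fuse_clouds(clouds, poses) -> np.ndarray:
    """clouds: list of N_i x 3 arrays, each in its UAV's camera frame.
    poses:  list of ((tx, ty, tz), (qw, qx, qy, qz)) camera poses in the world frame.
    Returns one (sum N_i) x 3 array in the world frame."""
    world = []
    for pts, (t, q) in zip(clouds, poses):
        R = quat_to_rot(*q) @ CAM_TO_BODY  # camera axes -> world axes
        world.append(pts @ R.T + np.asarray(t, dtype=float))
    return np.vstack(world) if world else np.empty((0, 3))
```

In practice the fused cloud is usually downsampled (e.g. with a voxel grid), since overlapping views from neighbouring UAVs produce many near-duplicate points.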

@article{koutras2020autonomous,
  title={Autonomous and cooperative design of the monitor positions for a team of UAVs to maximize the quantity and quality of detected objects},
  author={Koutras, Dimitrios I and Kapoutsis, Athanasios Ch and Kosmatopoulos, Elias B},
  journal={IEEE Robotics and Automation Letters},
  volume={5},
  number={3},
  pages={4986--4993},
  year={2020},
  publisher={IEEE}
}