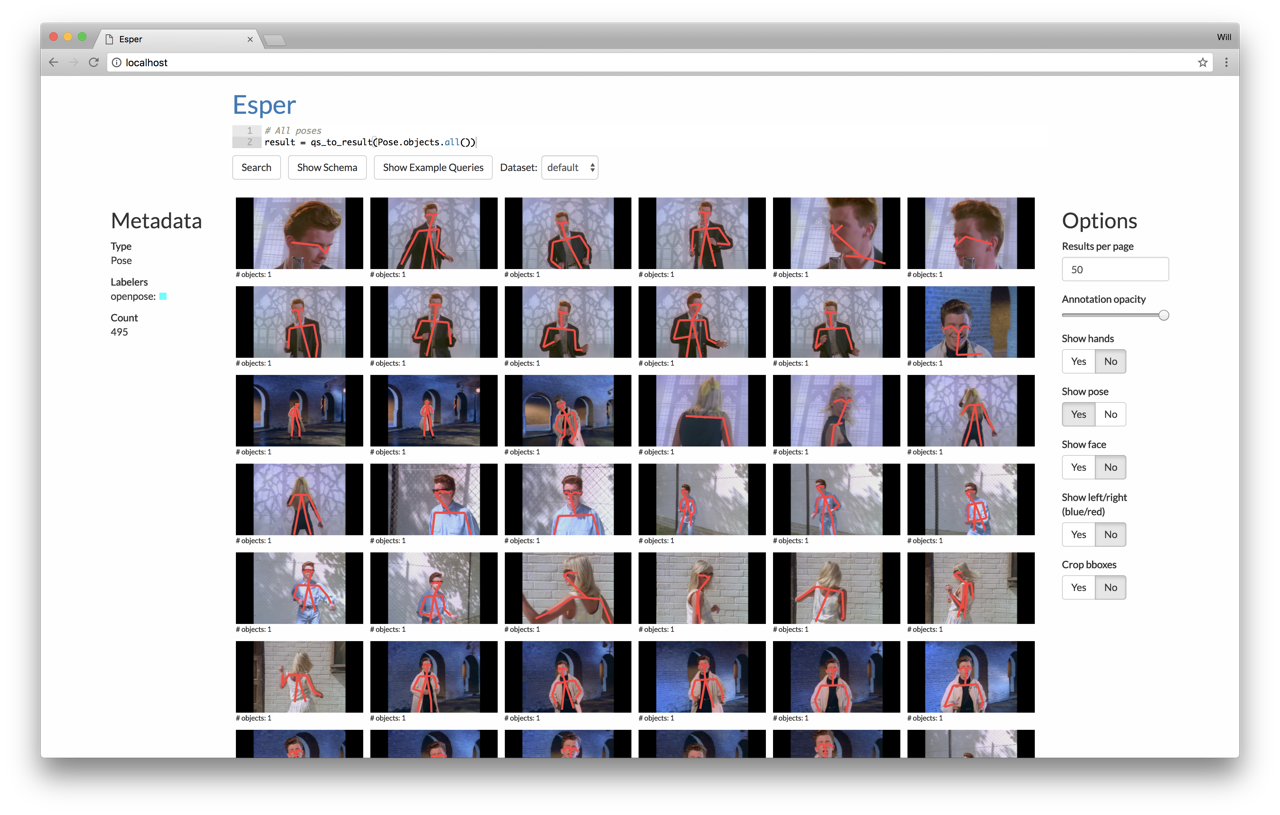

Esper is a framework for exploratory analysis of large video collections. Esper takes as input a set of videos and a database of metadata about those videos (e.g., bounding boxes, poses, tracks). It provides a web UI (pictured above) and a programmatic interface (Jupyter notebooks) for visualizing and analyzing this metadata. Computer vision researchers may find Esper useful for understanding and debugging the accuracy of their trained models.

First, install Docker, Python 2.7, and pip.
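Before going further, it can help to confirm that the prerequisites are actually on your PATH. The function below is a minimal sketch; check_prereqs is a hypothetical helper, not part of Esper, and the command names passed to it (docker, python2.7, pip) are assumptions that may differ on your system.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Esper): report any of the given commands
# that are not on PATH. Prints "missing: NAME" for each absent command and
# returns 0 only if every command was found.
check_prereqs() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd"
      missing=$((missing + 1))
    fi
  done
  [ "$missing" -eq 0 ]
}

# Usage (command names are assumptions; adjust them to your system):
# check_prereqs docker python2.7 pip && echo "all prerequisites found"
```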

If you have a GPU and are running on Linux, then install nvidia-docker. For any command below that uses docker-compose, use nvidia-docker-compose instead.
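If you switch between GPU and non-GPU machines, you could pick the compose command automatically. This is a heuristic sketch under stated assumptions: pick_compose is a hypothetical helper, and treating a successful nvidia-smi run as "a GPU is usable" is an assumption, not something Esper itself checks.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the compose command to use. Prefer
# nvidia-docker-compose when it is installed AND nvidia-smi runs successfully
# (assumed to mean a GPU is usable); otherwise fall back to docker-compose.
pick_compose() {
  if command -v nvidia-docker-compose >/dev/null 2>&1 \
     && nvidia-smi >/dev/null 2>&1; then
    echo nvidia-docker-compose
  else
    echo docker-compose
  fi
}

# Usage: "$(pick_compose)" up -d
```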

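Next, you will need to configure your Esper installation. If you are using Google Cloud, follow the instructions in Getting started with Google Cloud and replace local.toml with google.toml in the commands below. Otherwise, edit any relevant configuration values in config/local.toml. This file lives in the repository, so make your edits after the git clone and cd esper steps and before running configure.py. The full sequence is: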

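```bash
# Fetch the code
git clone https://github.com/scanner-research/esper
cd esper

# Add a "dc" shorthand for docker-compose to your shell profile
echo -e '\nalias dc=docker-compose' >> $HOME/.profile && source $HOME/.profile

# Install the Python requirements on the host
pip install -r requirements.txt

# Apply your configuration file and select the default dataset
python configure.py --config config/local.toml --dataset default

# Pull the images, start the containers in the background,
# then run the setup script inside the app container
dc pull
dc up -d
dc exec app ./scripts/setup.sh
```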
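Running the echo line twice appends a second alias to your profile. A minimal idempotent variant is sketched below; add_alias_once is a hypothetical helper, and the profile path and compose command are arguments you choose.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "alias dc=<cmd>" to a shell startup file only if
# no dc alias is defined there yet, so re-running setup adds no duplicates.
# $1 = startup file (e.g. "$HOME/.profile"), $2 = compose command.
add_alias_once() {
  local file="$1" cmd="${2:-docker-compose}"
  touch "$file"
  if ! grep -q '^alias dc=' "$file"; then
    printf '\nalias dc=%s\n' "$cmd" >> "$file"
  fi
}

# Usage: add_alias_once "$HOME/.profile" docker-compose && source "$HOME/.profile"
```

Note that bash expands aliases only in interactive shells by default, so inside your own scripts call docker-compose directly rather than dc.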

You have successfully set up Esper! Visit http://localhost (or the address of the server you are running on) to see the frontend. You will see a query interface, but it can't show anything until you load some data. Go through the demo below to visualize some sample videos and metadata we have provided.
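The containers can take a moment to start serving after dc up -d, and dc ps shows their state. To wait for the frontend from a script, a sketch like the following polls the URL; wait_for_http is a hypothetical helper, it assumes curl is installed, and it treats any HTTP response (even an error page) as "up".

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a URL once per second until the server sends any
# HTTP response, or give up after a timeout in seconds (default 60).
# Assumes curl is installed. Returns 0 when reachable, 1 on timeout.
wait_for_http() {
  local url="$1" timeout="${2:-60}" waited=0
  until curl -s -o /dev/null "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "no response from $url after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$url is up"
}

# Usage: wait_for_http http://localhost 120
```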

Common setup problems:

- Cannot connect to the Docker daemon: make sure that Docker is actually running (e.g. docker ps should not fail). On Linux, make sure you have non-sudo permissions (run sudo adduser $USER docker, then log out and back in so the new group membership takes effect). On OS X, make sure the Docker application is open (you should see a whale in your menu bar).
- Permissions errors with pip: either run pip with sudo or consider using a virtualenv.
- sh: 0: getcwd() failed: No such file or directory: please file an issue with reproducible steps if this happens. This should only occur on OS X.
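For the Docker daemon problem on Linux, you can check whether your current session already has the docker group. This is a minimal sketch with a hypothetical in_group helper. It reads id -nG, which reports the groups of the running session, so a group added with adduser will not appear until you log in again.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the current session belongs to the given
# group (default: docker). id -nG lists the session's groups separated by
# spaces; padding with spaces lets the pattern match whole names only.
in_group() {
  local group="${1:-docker}"
  case " $(id -nG) " in
    *" $group "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage:
# docker ps >/dev/null 2>&1 || in_group docker || echo "not in the docker group yet"
```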

We have prepared a sample database of frames and annotations (faces and poses) for an example video. This demo walks you through loading that database into your local copy of Esper and running a few example queries against it.

First, enter the Esper application container with dc exec app bash. Then run:

```bash
# Inside the app container: download and unpack the example dataset, then import it
wget https://storage.googleapis.com/esper/example-dataset.tar.gz
tar -xf example-dataset.tar.gz
esper-run query/datasets/default/import.py
```
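If you rerun the demo, you may not want to download the archive again, and a truncated download is easier to diagnose before extraction than during import. A minimal sketch follows; fetch_and_extract is a hypothetical helper that assumes the archive is gzip-compressed (as the .tar.gz name suggests) and that wget is available where you run it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: download a .tar.gz only if it is not already in the
# current directory, verify it can be listed, then extract it.
# Returns non-zero if the download fails or the archive is unreadable.
fetch_and_extract() {
  local url="$1" file
  file="$(basename "$url")"
  if [ ! -f "$file" ]; then
    wget "$url" || return 1
  fi
  # Listing the archive first catches corrupt or partial downloads
  tar -tzf "$file" >/dev/null || { echo "corrupt archive: $file" >&2; return 1; }
  tar -xzf "$file"
}

# Usage (inside the app container):
# fetch_and_extract https://storage.googleapis.com/esper/example-dataset.tar.gz
```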

Then visit http://localhost to see the web UI. A query has been pre-filled in the search box at the top. Click "Search" to see the results; this query shows all the detected faces in the video. Click "Show example queries" to see and run more examples.

Next, check out the Jupyter programming environment by visiting http://localhost:8888/notebooks/notebooks/example.ipynb. To log in to the Jupyter notebook, get the token by running ./scripts/jupyter-token.sh outside the container (on the host, from the esper directory).
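Jupyter also accepts the token as a ?token= query parameter, which lets you open the notebook directly. The sketch below assumes that ./scripts/jupyter-token.sh prints only the token (check its output first); notebook_url is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the example-notebook URL with the login token
# passed as Jupyter's ?token= query parameter. $2 is the host (default localhost).
notebook_url() {
  local token="$1" host="${2:-localhost}"
  echo "http://${host}:8888/notebooks/notebooks/example.ipynb?token=${token}"
}

# Usage (on the host, from the esper directory; assumes the script prints only the token):
# notebook_url "$(./scripts/jupyter-token.sh)"
```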

TODO(wcrichto): getting started with your own dataset