Ditto is a framework for transforming an Airflow DAG into another DAG that is flow-isomorphic with the original, i.e. it orchestrates a flow of operators that yields the same results but runs in another environment or platform. The framework was built to transform EMR DAGs to run on Azure HDInsight, but you can extend its API for any other kind of transformation. You can even produce a result that is not isomorphic with the source (although at that point you're better off writing a whole new DAG).

The purpose of the framework is to let you maintain one codebase and run your Airflow DAGs on different execution environments (e.g. on different clouds, or even different container frameworks, such as Spark on YARN vs. Kubernetes). It is meant for continuous, parallel DAG deployments rather than a one-time transformation, although you can use it for that too.

At its heart, Ditto is a graph manipulation library with extensible APIs for the actual transformation logic. It also comes with out-of-the-box support for EMR to HDInsight transformation.
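To make the idea of a flow-preserving graph transformation concrete, here is a minimal toy sketch. It does not use Ditto's API: the `Task` class, the `transform_graph` function and the operator kind names are all hypothetical, chosen only for illustration. The sketch swaps the kind of each node and leaves every edge untouched, so the transformed graph has the same flow as the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Hypothetical stand-in for an Airflow operator: a task id plus a kind."""
    task_id: str
    kind: str


def transform_graph(tasks, edges, kind_map):
    """Swap each task's kind via kind_map while keeping every edge intact.

    tasks:    dict of task_id -> Task
    edges:    set of (upstream_id, downstream_id) pairs
    kind_map: dict of source kind -> target kind (illustrative names only)
    """
    new_tasks = {
        task_id: Task(task_id, kind_map.get(task.kind, task.kind))
        for task_id, task in tasks.items()
    }
    # Edges refer to task ids only, so copying them preserves the flow.
    return new_tasks, set(edges)


source_tasks = {
    "create_cluster": Task("create_cluster", "EmrCreate"),
    "add_steps": Task("add_steps", "EmrAddSteps"),
    "notify": Task("notify", "Email"),
}
source_edges = {("create_cluster", "add_steps"), ("add_steps", "notify")}
kind_map = {"EmrCreate": "HdiCreate", "EmrAddSteps": "HdiSubmit"}

target_tasks, target_edges = transform_graph(source_tasks, source_edges, kind_map)
```

The sketch keeps a one-to-one mapping between nodes for brevity; tasks whose kind is not in `kind_map` (such as `notify`) pass through unchanged.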

```shell
pip install airflow-ditto
```

Ditto is designed to quickly and conveniently transform a large number of DAGs that follow a similar pattern. Here's how easy it is to use:

```python
# Assumes the Airflow and Ditto classes used below are already imported, and that
# DEFAULT_ARGS, hdi_create_cluster_op and emr_dag are defined elsewhere.
transformer = ditto.AirflowDagTransformer(DAG(
    dag_id='transformed_dag',
    default_args=DEFAULT_ARGS
), transformer_resolvers=[
    # Map each source operator class to the transformer that handles it
    AncestralClassTransformerResolver(
        {
            EmrCreateJobFlowOperator: EmrCreateJobFlowOperatorTransformer,
            EmrJobFlowSensor: EmrJobFlowSensorTransformer,
            EmrAddStepsOperator: EmrAddStepsOperatorTransformer,
            EmrStepSensor: EmrStepSensorTransformer,
            EmrTerminateJobFlowOperator: EmrTerminateJobFlowOperatorTransformer,
            S3KeySensor: S3KeySensorBlobOperatorTransformer
        }
    )], transformer_defaults=TransformerDefaultsConf({
        EmrCreateJobFlowOperatorTransformer: TransformerDefaults(
            default_operator=hdi_create_cluster_op
        )}))

# The transformer gets its own name so the `ditto` module is not shadowed
hdi_dag = transformer.transform(emr_dag)
```

or just...

```python
hdi_dag = ditto.templates.EmrHdiDagTransformerTemplate(DAG(
    dag_id='transformed_dag',
    default_args=DEFAULT_ARGS
), transformer_defaults=TransformerDefaultsConf({
    EmrCreateJobFlowOperatorTransformer: TransformerDefaults(
        default_operator=hdi_create_cluster_op
    )})).transform(emr_dag)
```

You can put the above call in any Python file that is visible to Airflow, and the resulting DAG is loaded thanks to how Airflow's DagBag finds DAGs.
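The reason a top-level assignment is enough can be sketched as follows. Airflow's DagBag imports the Python files it finds and collects the DAG objects bound to names at module top level. The toy below imitates that idea without Airflow: `FakeDag`, `collect_dags` and the module name are hypothetical stand-ins, not Airflow's actual classes or functions.

```python
import types


class FakeDag:
    """Hypothetical stand-in for airflow.DAG, so the sketch runs without Airflow."""

    def __init__(self, dag_id):
        self.dag_id = dag_id


def collect_dags(module):
    """Return {dag_id: dag} for every FakeDag bound to a top-level module name."""
    return {
        value.dag_id: value
        for value in vars(module).values()
        if isinstance(value, FakeDag)
    }


# Simulate a DAG file whose top level holds the result of a transform call.
dag_file = types.ModuleType("my_dag_file")
dag_file.hdi_dag = FakeDag("transformed_dag")
dag_file.helper_value = 42  # not a DAG, so it is ignored

found = collect_dags(dag_file)
```

In this sketch, the transformed DAG is picked up only because it is assigned to a module-level name; a DAG created inside a function and never bound at top level would not be found.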

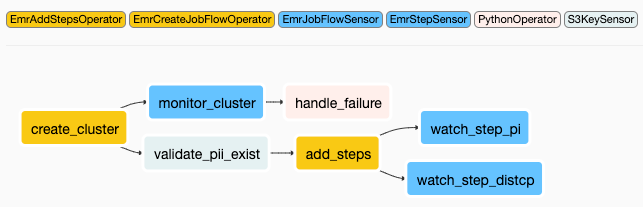

Source DAG (airflow view)

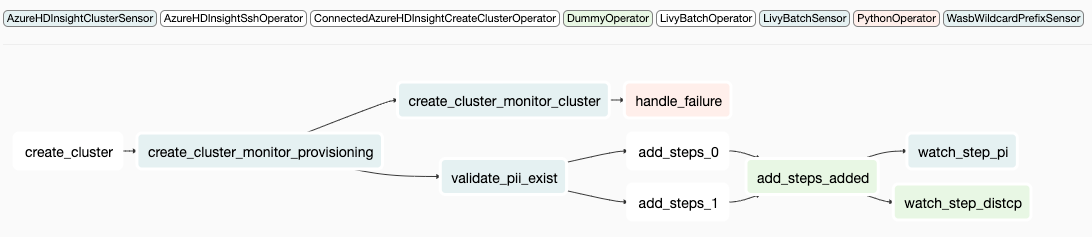

Transformed DAG

Read the detailed documentation here