Colorization is the process of adding color to a black-and-white image or video. A grayscale image is a scalar-valued function, whereas a color image is a vector-valued function, usually represented as three separate channels. Colorization therefore requires mapping a scalar to a vector (r, g, b), a problem that has no unique solution. Simply put, the mission of this project is to bring new life to old photos and movies.

1. How to Start the Project

2. Shot Transition Detection

3. Model Architecture

4. Color Propagation

5. Results

6. License

- NumPy

- OpenCV

- PIL

- Keras

- moviepy

Note: see dependencies.txt for more details.

python argv[0] argv[1] argv[2] argv[3]

argv[0] = main.py

argv[1] = 0 for image colorization, 1 for video colorization

argv[2] = 0 for the human model, 1 for the nature model

argv[3] = file name
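
The argument layout above can be checked with a few lines of Python. This is only a sketch of how such validation might look, not the code in main.py: the function name `parse_args`, the error messages, and the file name `old_movie.mp4` are hypothetical.

```python
# Hypothetical sketch: validates the three positional arguments described above.
MODES = {"0": "image", "1": "video"}
MODELS = {"0": "human", "1": "nature"}

def parse_args(argv):
    """Return (mode, model, file_name) from an argv-style list."""
    if len(argv) != 4:
        raise ValueError("usage: python main.py <0|1> <0|1> <file name>")
    _, mode, model, file_name = argv
    if mode not in MODES:
        raise ValueError("argv[1] must be 0 (image) or 1 (video)")
    if model not in MODELS:
        raise ValueError("argv[2] must be 0 (human) or 1 (nature)")
    return MODES[mode], MODELS[model], file_name

# Equivalent to running: python main.py 1 0 old_movie.mp4
print(parse_args(["main.py", "1", "0", "old_movie.mp4"]))
```

With these arguments the project would colorize a video using the human model.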

We divide the movie into shots by using *Sum of absolute differences* (SAD).

This is both the most obvious and the simplest algorithm: two consecutive grayscale frames are compared pixel by pixel, and the absolute values of the differences between corresponding pixels are summed.
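
A minimal NumPy sketch of SAD-based shot detection follows. It assumes that a shot boundary is declared when the per-pixel average of the SAD exceeds a threshold; the threshold value, the normalization by pixel count, and the function names are illustrative assumptions, not taken from the project.

```python
import numpy as np

def sad(frame_a, frame_b):
    """Sum of absolute differences between two same-sized grayscale frames."""
    # Cast to a signed type first: subtracting uint8 arrays would wrap around.
    a = frame_a.astype(np.int64)
    b = frame_b.astype(np.int64)
    return int(np.abs(a - b).sum())

def shot_boundaries(frames, threshold):
    """Return every index i at which frame i is assumed to start a new shot."""
    boundaries = []
    for i in range(1, len(frames)):
        # Assumption: normalize by pixel count so the threshold
        # does not depend on the frame resolution.
        if sad(frames[i - 1], frames[i]) / frames[i].size > threshold:
            boundaries.append(i)
    return boundaries

# Toy example: two dark frames followed by two bright frames.
dark = np.zeros((4, 4), dtype=np.uint8)
bright = np.full((4, 4), 200, dtype=np.uint8)
print(shot_boundaries([dark, dark, bright, bright], threshold=30))  # [2]
```

In practice the threshold would have to be tuned, since fast motion or flashes can also produce large differences within a single shot.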

The generator part of a GAN learns to create fake data by incorporating feedback from the discriminator. It learns to make the discriminator classify its output as real. Training the generator involves:

- random input

- the generator network, which transforms the random input into a data instance

- the discriminator network, which classifies the generated data

- the discriminator output

- the generator loss, which penalizes the generator for failing to fool the discriminator

The generator can be broken down into two pieces: the encoder and the decoder.
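
The idea of a generator loss that penalizes the generator for failing to fool the discriminator can be sketched as follows. The source does not state which loss the project uses; this example assumes plain binary cross-entropy against the label "real", and the function name `generator_loss` is hypothetical.

```python
import math

def generator_loss(d_outputs, eps=1e-7):
    """Mean binary cross-entropy of discriminator outputs against the label 1 (real).

    d_outputs: probabilities in [0, 1] that the discriminator assigns
    to generated samples being real.
    """
    total = 0.0
    for p in d_outputs:
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total += -math.log(p)
    return total / len(d_outputs)

# The loss is small when the discriminator is fooled and large when it is not.
print(generator_loss([0.9, 0.95]))
print(generator_loss([0.1, 0.05]))
```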

The discriminator is a much simpler model than the generator: it is a standard convolutional neural network (CNN) that predicts whether the RGB channels are real or fake. It has eight stride-2 convolutional layers, each of which uses dropout, leaky ReLU activation and, except for the first layer, batch normalization.
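
To make the layer layout concrete, the sketch below lists the operations of each of the eight layers and the spatial size after each stride-2 convolution. The 256 x 256 input size, the "same" padding, and the order of operations inside a layer are assumptions for illustration; the source only states which operations each layer contains.

```python
import math

def discriminator_plan(input_size, num_layers=8, stride=2):
    """Return a list of (operations, output height/width), one entry per layer."""
    plan = []
    size = input_size
    for i in range(num_layers):
        # With "same" padding, a strided convolution outputs ceil(size / stride).
        size = math.ceil(size / stride)
        ops = ["conv (stride 2)"]
        if i > 0:  # batch normalization is skipped on the first layer
            ops.append("batch norm")
        ops += ["leaky ReLU", "dropout"]  # assumed ordering
        plan.append((ops, size))
    return plan

for ops, size in discriminator_plan(256):
    print(f"{size:>3} x {size:<3} {' -> '.join(ops)}")
```

Under these assumptions a 256 x 256 input shrinks to 1 x 1 after the eighth layer, which is convenient for a single real-or-fake prediction.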

Color propagation transfers the color of each contour in the keyframe (the frame colorized by the generator model) to the other frames of the same shot. The colorized frame is first converted to LAB, and the contours of each pair of consecutive frames are then compared.

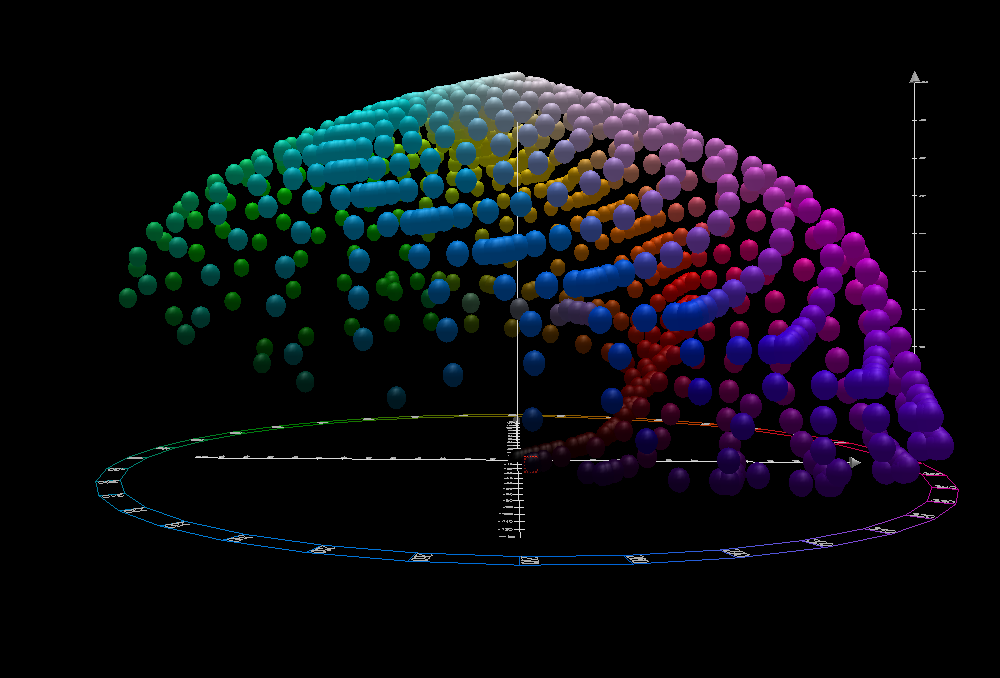

The first step was converting the images from their standard RGB color channels into CIE-LAB, whose three channels are:

- L - Represents the white-to-black trade-off of the pixels

- A - Represents the red-to-green trade-off of the pixels

- B - Represents the blue-to-yellow trade-off of the pixels
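
The meaning of the three channels can be checked with a small pure-Python conversion of a single pixel. This sketch uses the standard sRGB to CIE-LAB formulas with a D65 white point; it is for illustration only, and the project itself presumably relies on a library routine (for example in OpenCV) rather than this hypothetical `rgb_to_lab` function.

```python
def rgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIE-LAB (D65 white point)."""
    def to_linear(c):
        c /= 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)
    # Linear sRGB -> XYZ
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    # XYZ -> LAB, relative to the D65 reference white
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)

for name, pixel in [("white", (255, 255, 255)), ("red", (255, 0, 0)),
                    ("green", (0, 255, 0)), ("blue", (0, 0, 255))]:
    L, A, B = rgb_to_lab(*pixel)
    print(f"{name:>5}: L={L:6.1f}  A={A:7.1f}  B={B:7.1f}")
```

White gives L close to 100 with A and B near zero, red gives a positive A, green a negative A, and blue a negative B. Because L carries all the lightness information, a grayscale frame already supplies the L channel and only A and B need to be predicted.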

Colorization of Nature