def test_assign_arrays_raise_error():

foo = Embedding("foo", [0.1, 0.3, 0.10])

bar = Embedding("bar", [0.7, 0.2, 0.11])

emb = EmbeddingSet(foo, bar)

with pytest.raises(ValueError):

emb_with_property = emb.assign(prop_a=["a", "b"],

prop_b=np.array([1, 2, 3]))

def transform(self, embset: EmbeddingSet) -> EmbeddingSet:

names, X = embset.to_names_X()

if not self.is_fitted:

self.tfm.fit(X)

new_vecs = self.tfm.transform(X)

new_dict = new_embedding_dict(names, new_vecs, embset)

return EmbeddingSet(new_dict, name=f"{embset.name}.{self.name}()")

def test_assign_literal_values():

foo = Embedding("foo", [0.1, 0.3, 0.10])

bar = Embedding("bar", [0.7, 0.2, 0.11])

emb = EmbeddingSet(foo, bar)

emb_with_property = emb.assign(prop_a="prop-one", prop_b=1)

assert all([e.prop_a == "prop-one" for e in emb_with_property])

assert all([e.prop_b == 1 for e in emb_with_property])

def test_assign():

foo = Embedding("foo", [0.1, 0.3, 0.10])

bar = Embedding("bar", [0.7, 0.2, 0.11])

emb = EmbeddingSet(foo, bar)

emb_with_property = emb.assign(prop_a=lambda d: "prop-one",

prop_b=lambda d: "prop-two")

assert all([e.prop_a == "prop-one" for e in emb_with_property])

assert all([e.prop_b == "prop-two" for e in emb_with_property])

def test_assign_arrays():

foo = Embedding("foo", [0.1, 0.3, 0.10])

bar = Embedding("bar", [0.7, 0.2, 0.11])

emb = EmbeddingSet(foo, bar)

emb_with_property = emb.assign(prop_a=["a", "b"], prop_b=np.array([1, 2]))

assert emb_with_property["foo"].prop_a == "a"

assert emb_with_property["bar"].prop_a == "b"

assert emb_with_property["foo"].prop_b == 1

assert emb_with_property["bar"].prop_b == 2

def test_to_x_y():

foo = Embedding("foo", [0.1, 0.3])

bar = Embedding("bar", [0.7, 0.2])

buz = Embedding("buz", [0.1, 0.9])

bla = Embedding("bla", [0.2, 0.8])

emb1 = EmbeddingSet(foo, bar).add_property("label", lambda d: 'group-one')

emb2 = EmbeddingSet(buz, bla).add_property("label", lambda d: 'group-two')

emb = emb1.merge(emb2)

X, y = emb.to_X_y(y_label='label')

assert X.shape == emb.to_X().shape == (4, 2)

assert list(y) == ['group-one', 'group-one', 'group-two', 'group-two']

def test_embeddingset_creation():

foo = Embedding("foo", [0, 1])

bar = Embedding("bar", [1, 1])

emb = EmbeddingSet(foo)

assert len(emb) == 1

assert "foo" in emb

emb = EmbeddingSet(foo, bar)

assert len(emb) == 2

assert "foo" in emb

assert "bar" in emb

emb = EmbeddingSet({"foo": foo})

assert len(emb) == 1

assert "foo" in emb

def test_from_names_X():

names = ["foo", "bar", "buz"]

X = [

[1.0, 2],

[3, 4.0],

[0.5, 0.6],

]

embset = EmbeddingSet.from_names_X(names, X)

assert "foo" in embset

assert len(embset) == 3

assert np.array_equal(embset.to_X(), np.array(X))

names = names[:2]

with pytest.raises(ValueError, match="The number of given names"):

EmbeddingSet.from_names_X(names, X)

def transform(self, embset):

names, X = embset.to_names_X()

new_vecs = np.array(self.emb.transform(X))

names_out = names + [f"tsne_{i}" for i in range(self.n_components)]

vectors_out = np.concatenate([new_vecs, np.eye(self.n_components)])

new_dict = new_embedding_dict(names_out, vectors_out, embset)

return EmbeddingSet(new_dict, name=f"{embset.name}.tsne_{self.n_components}()")

def fit(self, embset: EmbeddingSet) -> "SklearnTransformer":

if not self.is_fitted:

# This is a bit of an anti-pattern. You should not need to `self.tfm`. However, there are

# some packages like OpenTSNE that return a different kind of estimator once an estimator has been fitted.

self.tfm = self.tfm.fit(embset.to_X())

self.is_fitted = True

return self

def embset():

names = ["red", "blue", "green", "yellow", "white"]

vectors = np.random.rand(5, 4) * 10 - 5

embeddings = [

Embedding(name, vector) for name, vector in zip(names, vectors)

]

return EmbeddingSet(*embeddings)

def embset_similar(

self,

emb: Union[str, Embedding],

n: int = 10,

lower=False,

metric="cosine",

) -> EmbeddingSet:

"""

Retreive an [EmbeddingSet][whatlies.embeddingset.EmbeddingSet] that are the most similar to the passed query.

Arguments:

emb: query to use

n: the number of items you'd like to see returned

metric: metric to use to calculate distance, must be scipy or sklearn compatible

lower: only fetch lower case tokens

Important:

This method is incredibly slow at the moment without a good `top_n` setting due to

[this bug](https://github.com/facebookresearch/fastText/issues/1040).

Returns:

An [EmbeddingSet][whatlies.embeddingset.EmbeddingSet] containing the similar embeddings.

"""

embs = [

w[0] for w in self.score_similar(

emb=emb, n=n, lower=lower, metric=metric)

]

return EmbeddingSet({w.name: w for w in embs})

def transform(self, embset):

names, X = embset_to_X(embset=embset)

new_vecs = self.tfm.transform(X)

names_out = names + [f"pca_{i}" for i in range(self.n_components)]

vectors_out = np.concatenate([new_vecs, np.eye(self.n_components)])

new_dict = new_embedding_dict(names_out, vectors_out, embset)

return EmbeddingSet(new_dict,

name=f"{embset.name}.pca_{self.n_components}()")

def transform(self, embset):

names, X = embset.to_names_X()

with warnings.catch_warnings():

warnings.simplefilter("ignore", category=NumbaPerformanceWarning)

new_vecs = self.tfm.transform(X)

new_dict = new_embedding_dict(names, new_vecs, embset)

return EmbeddingSet(new_dict,

name=f"{embset.name}.umap_{self.n_components}()")

def transform(self, embset):

names, X = embset.to_names_X()

# We are re-writing the transform method here because TSNE cannot .fit().transform().

# Check the docs here: https://scikit-learn.org/stable/modules/generated/sklearn.manifold.TSNE.html#sklearn.manifold.TSNE

new_vecs = self.tfm.fit_transform(X)

new_dict = new_embedding_dict(names, new_vecs, embset)

return EmbeddingSet(new_dict,

name=f"{embset.name}.tsne({self.n_components})")

def transform(self, embset):

names, X = embset.to_names_X()

np.random.seed(self.seed)

new_vecs = self.tfm.transform(X)

new_dict = new_embedding_dict(names, new_vecs, embset)

return EmbeddingSet(

new_dict,

name=f"{embset.name}",

)

def transform(self, embset):

names, X = embset_to_X(embset=embset)

with warnings.catch_warnings():

warnings.simplefilter("ignore", category=NumbaPerformanceWarning)

new_vecs = self.tfm.transform(X)

names_out = names + [f"umap_{i}" for i in range(self.n_components)]

vectors_out = np.concatenate([new_vecs, np.eye(self.n_components)])

new_dict = new_embedding_dict(names_out, vectors_out, embset)

return EmbeddingSet(new_dict,

name=f"{embset.name}.umap_{self.n_components}()")

def transform(self, embset):

names, X = embset.to_names_X()

np.random.seed(self.seed)

orig_dict = embset.embeddings.copy()

new_dict = {

f"rand_{k}": Embedding(f"rand_{k}",

np.random.normal(0, self.sigma, X.shape[1]))

for k in range(self.n)

}

return EmbeddingSet({**orig_dict, **new_dict})

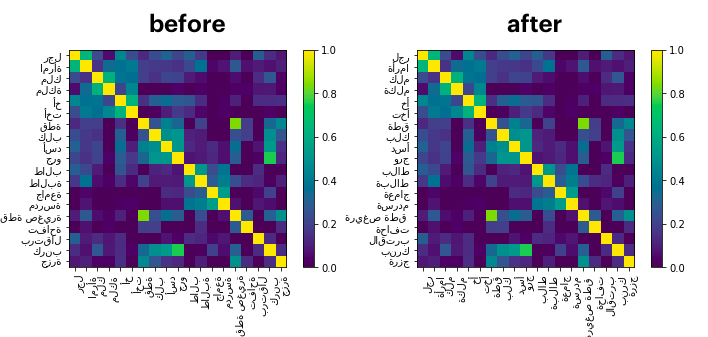

def reverse_strings(embset):

"""

This helper will reverse the strings in the embeddingset. This can be useful

for making matplotlib plots with Arabic texts.

This helper is meant to be used via `EmbeddingSet.pipe()`.

Arguments:

embset: EmbeddingSet to adapt

Usage:

```python

from whatlies.helpers import reverse_strings

from whatlies.language import BytePairLanguage

translation = {

"man":"رجل",

"woman":"امرأة",

"king":"ملك",

"queen":"ملكة",

"brother":"أخ",

"sister":"أخت",

"cat":"قطة",

"dog":"كلب",

"lion":"أسد",

"puppy":"جرو",

"male student":"طالب",

"female student":"طالبة",

"university":"جامعة",

"school":"مدرسة",

"kitten":" قطة صغيرة",

"apple" : "تفاحة",

"orange" : "برتقال",

"cabbage" : "كرنب",

"carrot" : "جزرة"

}

lang_cv = BytePairLanguage("ar")

arabic_words = list(words.values())

# before

lang_cv[translation].plot_similarity()

# after

lang_cv[translation].pipe(reverse_strings).plot_similarity()

```

"""

return EmbeddingSet(

*[Embedding(name=e.name[::-1], vector=e.vector) for e in embset])

def __getitem__(self, item):

"""

Retreive a single embedding or a set of embeddings. If an embedding contains multiple

sub-tokens then we'll average them before retreival.

Arguments:

item: single string or list of strings

**Usage**

```python

> lang = BytePairLang(lang="en")

> lang['python']

> lang[['python', 'snake']]

> lang[['nobody expects', 'the spanish inquisition']]

```

"""

if isinstance(item, str):

return Embedding(item, self.module.embed(item).mean(axis=0))

if isinstance(item, list):

return EmbeddingSet(*[self[i] for i in item])

raise ValueError(f"Item must be list of string got {item}.")

def test_embset_creation_warning():

foo = Embedding("foo", [0, 1])

# This vector has the same name dimension. Dangerzone.

bar = Embedding("foo", [1, 2])

with pytest.raises(Warning):

EmbeddingSet(foo, bar)

def emb():

x = Embedding("x", [0.0, 1.0])

y = Embedding("y", [1.0, 0.0])

z = Embedding("z", [0.5, 0.5])

return EmbeddingSet(x, y, z)

def transform(self, embset):

names, X = embset.to_names_X()

new_vecs = self.tfm.transform(X)

new_dict = new_embedding_dict(names, new_vecs, embset)

return EmbeddingSet(new_dict, name=f"{embset.name}.ivis_{self.n_components}()")

def __getitem__(self, query):

if isinstance(query, str):

return Embedding(query, vector=self.model.encode(query))

else:

return EmbeddingSet(*[self[tok] for tok in query])

def test_add_property():

foo = Embedding("foo", [0.1, 0.3, 0.10])

bar = Embedding("bar", [0.7, 0.2, 0.11])

emb = EmbeddingSet(foo, bar)

emb_with_property = emb.add_property("prop_a", lambda d: "prop-one")

assert all([e.prop_a == "prop-one" for e in emb_with_property])

def test_embset_creation_error():

foo = Embedding("foo", [0, 1])

# This vector has a different dimension. No bueno.

bar = Embedding("bar", [1, 1, 2])

with pytest.raises(ValueError):

EmbeddingSet(foo, bar)

def transform(self, embset):

names, X = embset.to_names_X()

new_vecs = np.array(self.emb.transform(X))

new_dict = new_embedding_dict(names, new_vecs, embset)

return EmbeddingSet(new_dict, name=f"{embset.name}.tsne_{self.n_components}()")

st.markdown("# Simple Text Clustering")

st.markdown(

"Let's say you've gotten a lot of feedback from clients on different channels. You might like to be able to distill main topics and get an overview. It might even inspire some intents that will be used in a virtual assistant!"

)

st.markdown(

"This tool will help you discover them. This app will attempt to cluster whatever text you give it. The chart will try to clump text together and you can explore underlying patterns."

)

if method == "CountVector SVD":

lang = CountVectorLanguage(n_svd, ngram_range=(min_ngram, max_ngram))

embset = lang[texts]

if method == "Lite Sentence Encoding":

embset = EmbeddingSet(

*[

Embedding(t, v)

for t, v in zip(texts, calculate_embeddings(texts, encodings=encodings))

]

)

p = (

embset.transform(reduction)

.plot_interactive(annot=False)

.properties(width=500, height=500, title="")

)

st.write(p)

st.markdown(

"While the tool helps you in discovering clusters, it doesn't do labelling (yet). We do offer a [jupyter notebook](https://github.com/RasaHQ/rasalit/tree/master/notebooks/bulk-labelling) that might help out though."

)

def transform(self, embset: EmbeddingSet) -> EmbeddingSet:

names, X = embset.to_names_X()

axis = 0 if self.feature else 1

X = normalize(X, norm=self.norm, axis=axis)

new_dict = new_embedding_dict(names, X, embset)

return EmbeddingSet(new_dict, name=embset.name)

To do : None

"""

# %% load libraries

from whatlies import EmbeddingSet

from whatlies.language import SpacyLanguage

# %% load a model of the language 'via' whatlies

lang = SpacyLanguage("en_core_web_lg")

# %% create a list of lexical items

"""

let's see how animals (actors) map onto qualities (attributes)

"""

# sample animals

animals = ["cat", "dog", "mouse"]

# sample qualities

qualities = ["responsive", "loyal"]

# set of lexical items

items = animals + qualities

# %% browse the loaded model of the language, retrieve the vectors

# and create and initialize an embedding sets (a class specific

# to the library whatlies)

emb = EmbeddingSet(*[lang[item] for item in items])

# the position of animals should be relative to the word vectors

# for 'smart' and 'loyal'

emb.plot_interactive(x_axis=emb["responsive"], y_axis=emb["loyal"])