def create_df(self, product, year, place_type, place, level, variables):
    """Fetch `variables` at `level` for `place` and return a cleaned dataframe."""
    # Pick the census product; ACS falls back to its default year if none is given.
    if product == 'Decennial2010':
        cen_prod = products.Decennial2010()
    elif product == 'ACS' and year:
        cen_prod = products.ACS(year)
    elif product == 'ACS':
        cen_prod = products.ACS()
    else:
        raise ValueError("Unsupported product: %r" % (product,))

    # Dispatch table from the place type to the matching query method.
    place_mapper = {
        'msa': cen_prod.from_msa,
        'csa': cen_prod.from_csa,
        'county': cen_prod.from_county,
        'state': cen_prod.from_state,
        'placename': cen_prod.from_place
    }

    ui.item(("Retrieving variables %s for all %ss in %s. "
             "This can take some time for large datasets.")
            % (variables, level, place))
    print()
    print(place, level, variables)

    df = place_mapper[place_type](place, level=level, variables=variables)
    df.columns = [utils.clean_string(x) for x in df.columns]
    return df

def test_all():

from cenpy import products

# In[2]:

chicago = products.ACS(2017).from_place(

"Chicago, IL", level="tract", variables=["B00002*", "B01002H_001E"])

# In[3]:

# Install the prerelease candidate using:

#

# ```

# pip install --pre cenpy

# ```

#

# I plan to make a full 1.0 release in July. File bugs, rough edges, things you want me to know about, and interesting behavior at [https://github.com/ljwolf/cenpy](https://github.com/ljwolf/cenpy)! I'll also maintain a roadmap [here](https://github.com/ljwolf/cenpy/milestone/2).

# # Cenpy 1.0.0

#

# Cenpy started as an interface to explore and query the US Census API and return pandas DataFrames. This was mainly intended as a wrapper over the basic functionality provided by the Census Bureau. I was initially inspired by `acs.R` in its functionality and structure. In addition to `cenpy`, a few other census packages exist in the Python ecosystem, such as:

#

# - [datamade/census](https://github.com/datamade/census) - "a python wrapper for the US Census API"

# - [jtleider/censusdata](https://github.com/jtleider/censusdata) - "download data from Census API"

#

# And, I've also heard/seen folks use `requests` raw on the Census API to extract the data they want.

#

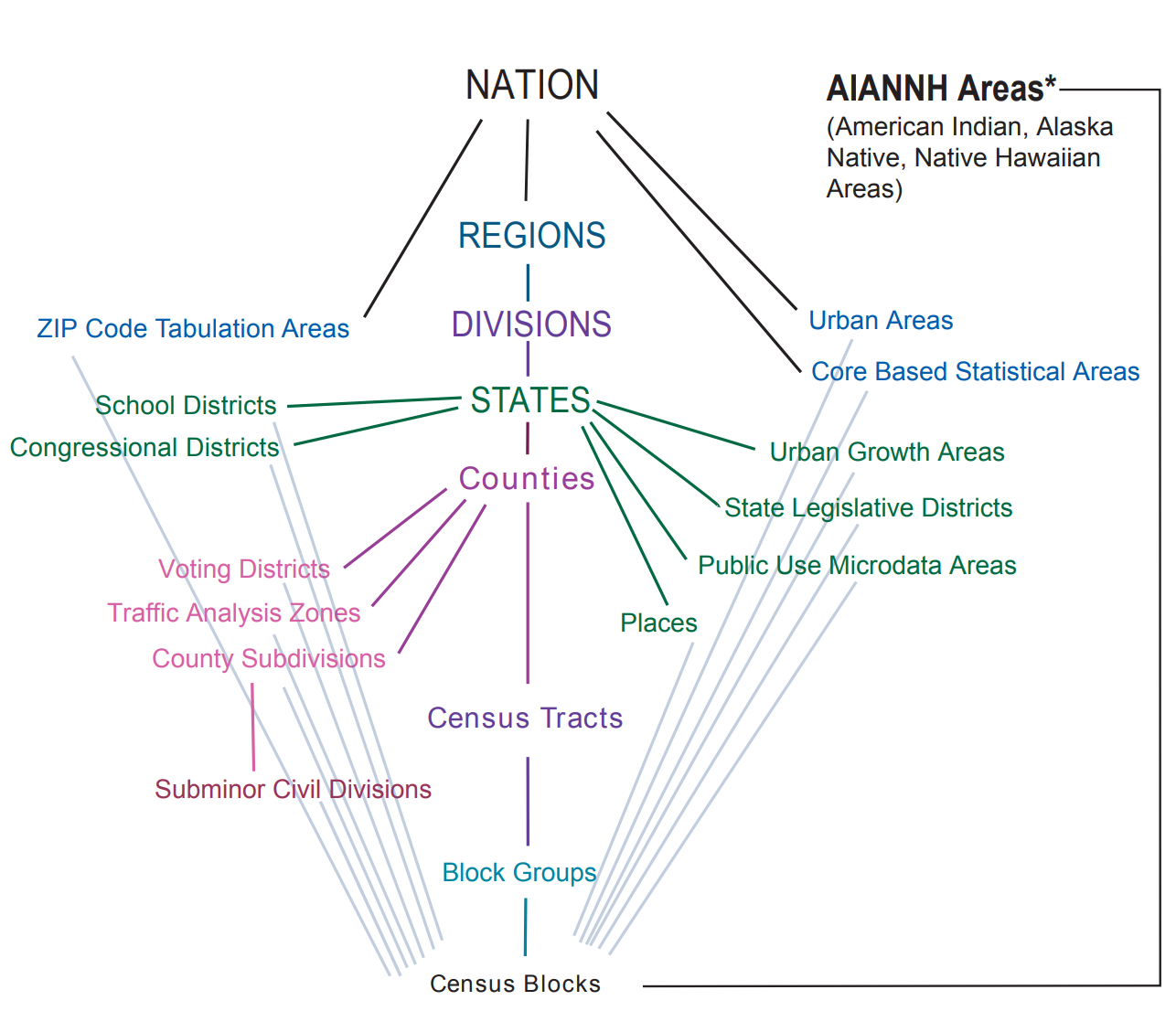

# All of the packages I've seen (including `cenpy` itself) involved a very stilted/specific API query due to the way the census API worked. Basically, it's difficult to construct an efficienty query against the census API without knowing the so-called "geographic hierarchy" in which your query fell:

#

#

# The main census API does not allow a user to leave middle levels of the hierarchy vague: For you to get a collection of census tracts in a state, you need to query for all the *counties* in that state, then express your query about tracts in terms of a query about all the tracts in those counties. Even `tidycensus` in `R` [requires this in many common cases](https://walkerke.github.io/tidycensus/articles/basic-usage.html#geography-in-tidycensus).

#

# Say, to ask for all the blocks in Arizona, you'd need to send a few separate queries:

# ```

# what are the counties in Arizona?

# what are the tracts in all of these counties?

# what are the blocks in all of these tracts in all of these counties?

# ```

# This was necessary because of the way the hierarchy diagram (shown above) is structured. Blocks don't have a unique identifier outside of their own tract; if you ask for block `001010`, there might be a bunch of blocks around the country that match that identifier. Sometimes, this meant conducting a very large number of repetitive queries, since the packages are trying to build out a correct search tree hierarchy. This style of [tree search](https://en.wikipedia.org/wiki/Breadth-first_search) is relatively slow, especially when conducting this search over the internet...
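# To make the cost of this top-down search concrete, here is a minimal sketch over a toy hierarchy. Every identifier in `TOY_HIERARCHY` is invented and `blocks_by_tree_search` is a hypothetical helper, not part of `cenpy`; each dictionary lookup stands in for one request to the census API.

```python
# Toy hierarchy: state -> counties -> tracts -> blocks (all identifiers invented).
TOY_HIERARCHY = {
    "Arizona": {
        "county_A": {"tract_1": ["b1", "b2"], "tract_2": ["b1"]},
        "county_B": {"tract_1": ["b1", "b2", "b3"]},
    }
}


def blocks_by_tree_search(hierarchy, state):
    """Walk the hierarchy top-down, counting one query per parent visited."""
    n_queries = 1  # "what are the counties in this state?"
    blocks = []
    for county, tracts in hierarchy[state].items():
        n_queries += 1  # "what are the tracts in this county?"
        for tract, block_ids in tracts.items():
            n_queries += 1  # "what are the blocks in this tract?"
            # A block is only unique together with its full chain of parents.
            blocks.extend((state, county, tract, b) for b in block_ids)
    return blocks, n_queries


blocks, n_queries = blocks_by_tree_search(TOY_HIERARCHY, "Arizona")
bare_ids = {block[-1] for block in blocks}
print(len(blocks), "blocks,", len(bare_ids), "distinct bare ids,", n_queries, "queries")
```

# The number of queries grows with the number of counties and tracts visited, and the bare block ids repeat across tracts, which is why the full parent chain has to be carried along.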

#

# So, if we focus on the geo-in-geo style queries using the hierarchy above, we're in a tough spot if we want to *also* make the API easy for humans to use.

#

# # Enter Geographies

#

# Fortunately for us, a *geographic information system* can figure out these kinds of nesting relationships without having to know each of the levels above or below. This lets us use very natural query types, like:

# ```

# what are the blocks *within* Arizona?

# ```

# `cenpy` already had access to such a *geographic information system*: the TIGER Web Mapping Service. These are ESRI Mapservices that allow for a fairly complex set of queries to extract information. But, in general, neither [`census`](https://github.com/datamade/census/pull/33) nor `censusdata` used the TIGER web map service API. Cenpy's `cenpy.tiger` was a [fully-featured wrapper around the ESRI Mapservice](https://nbviewer.jupyter.org/gist/dfolch/2440ba28c2ddf5192ad7#5.-Pull-down-the-geometry), but was mainly *not* used by the package itself to solve the tricky `geo-in-geo` problem of building many queries.

#

# Instead, `cenpy` 1.0.0 uses the TIGER Web Mapping Service to intelligently get *all* the required geographies, and then queries for those geographies in a very parsimonious way. This means that, instead of tying our user interface to the census's data structures, we can have some much more natural place-based query styles.

# # For instance

# Let's grab all the tracts in Los Angeles. And, let's get the Race table, `P004`.

# The new `cenpy` API revolves around *products*, which integrate the geographic and the data APIs together. For starters, we'll use the 2010 Decennial API:

# In[5]:

dectest = products.Decennial2010()

# Now, since we don't need to worry about entering geo-in-geo structures for our queries, we can request Race data for all the tracts in Los Angeles County using the following method:

# In[6]:

la = dectest.from_county("Los Angeles, CA",

level="tract",

variables=["^P004"])

# And, making a pretty plot of the Hispanic population in LA:

# In[7]:

# How this *works* from a software perspective is a significant improvement on how the other packages, and earlier versions of `cenpy` itself, work.

# 1. Take the name the user provided and find a match `target` within a level of the census geography. *(e.g. match Los Angeles, CA to Los Angeles County)*

# 2. **Using the Web Mapping Service,** find all the tracts that fall within our `target`.

# 3. **Using the data API,** query for all the data about those tracts that are requested.

#

# Since the Web Mapping Service provides us all the information needed to build a complete geo-in-geo query, we don't need to use repeated queries. Further, since we are using *spatial querying* to do the heavy lifting, there's no need for the user to specify a detailed geo-in-geo hierarchy: using the [Census GIS](https://tigerweb.geo.census.gov/tigerwebmain/tigerweb_restmapservice.html), we can build the hierarchy for free.
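# The final data-API step can be sketched roughly as follows, assuming the spatial query has already returned 11-digit tract GEOIDs (2-digit state, 3-digit county, 6-digit tract). This is not `cenpy`'s actual implementation: `build_tract_queries` is a hypothetical helper, the tract codes are made up for illustration, and the `get`/`for`/`in` keys only mirror the query style of the census data API.

```python
from collections import defaultdict


def build_tract_queries(tract_geoids, variables):
    """Group tract GEOIDs by (state, county) and emit one query per county."""
    by_county = defaultdict(list)
    for geoid in tract_geoids:
        state, county, tract = geoid[:2], geoid[2:5], geoid[5:11]
        by_county[(state, county)].append(tract)

    queries = []
    for (state, county), tracts in sorted(by_county.items()):
        queries.append({
            "get": ",".join(variables),
            "for": "tract:" + ",".join(sorted(tracts)),
            "in": f"state:{state} county:{county}",
        })
    return queries


# Illustrative GEOIDs: two tracts in county 06037 and one in county 06059.
geoids = ["06037101122", "06037101110", "06059001101"]
queries = build_tract_queries(geoids, ["P001001"])
for query in queries:
    print(query)
```

# Three tracts collapse into two requests here, one per county, instead of one request per level of the hierarchy.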

# This even works for grabbing `block` information over an entire metropolitan area, such as the Carson City, NV MSA:

# In[8]:

aus = dectest.from_msa("Carson City, NV",

level="block",

variables=["^P003", "P001001"])

# In[9]:

# Or, for example, a case that's difficult to deal with: census geographies that span two states. Let's just grab all the tracts in the Kansas City combined statistical area, regardless of which state they fall into:

# In[11]:

ks = dectest.from_csa("Kansas City", level="tract", variables=["P001001"])

# In[12]:

# ## A bit of the weeds

#

# Thus, now `cenpy` has a very simple interface to grab just the data you want and get out of your way. But, there are a few additional helper functions to make it simple to work with data.

#

# For instance, it's possible to extract the boundary of the *target* geography using the `return_bounds` argument:

# In[13]:

ma, ma_bounds = dectest.from_state("Massachusetts", return_bounds=True)

# In[14]:

# And, because some kinds of census geometries do not nest neatly within one another, it's possible to request that the "within" part of the geo-in-geo operation be relaxed to consider any geometry that *intersects* the requested place:

# In[15]:

tuc, tuc_bounds = dectest.from_place("Tucson, AZ",

level="tract",

return_bounds=True,

strict_within=False)
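# The difference between the strict and relaxed predicates can be illustrated with plain axis-aligned boxes. This is only a sketch of the two spatial tests, not how `cenpy` or the Web Mapping Service evaluates them; `Box`, `within`, and `intersects` are hypothetical names and the coordinates are invented.

```python
from typing import NamedTuple


class Box(NamedTuple):
    minx: float
    miny: float
    maxx: float
    maxy: float


def within(inner, outer):
    """True if `inner` lies entirely inside `outer`."""
    return (inner.minx >= outer.minx and inner.miny >= outer.miny
            and inner.maxx <= outer.maxx and inner.maxy <= outer.maxy)


def intersects(a, b):
    """True if the two boxes share at least one point."""
    return (a.minx <= b.maxx and b.minx <= a.maxx
            and a.miny <= b.maxy and b.miny <= a.maxy)


place = Box(0, 0, 10, 10)
tracts = {
    "inside": Box(1, 1, 4, 4),
    "straddling": Box(8, 8, 12, 12),
    "outside": Box(20, 20, 25, 25),
}

strict = [name for name, box in tracts.items() if within(box, place)]
relaxed = [name for name, box in tracts.items() if intersects(box, place)]
print("strict:", strict)
print("relaxed:", relaxed)
```

# The straddling tract is dropped by the strict test and kept by the relaxed one, which is the trade-off `strict_within=False` makes.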

# In[16]:

# # Additional Products

#

# This works for all of the ACSs that are supported by the Web Mapping Service. This means that `cenpy` supports this place-based API for the results from 2017 to 2019:

# In[17]:

for year in range(2017, 2020):
    print(year)
    acs = products.ACS(year=year)
    acs.from_place("Tucson, AZ")

# And it has the same general structure as we saw before:

# In[18]:

sf = products.ACS(2017).from_place("San Francisco, CA",

level="tract",

variables=["B00002*", "B01002H_001E"])

# Module-level imports this function relies on.
import sys
from pathlib import Path

import geopandas as gpd
import pandas
from cenpy import products
from tqdm import tqdm


def fetch_acs(
    state="all",
    level="tract",
    year=2017,
    output_dir=None,
    skip_existing=True,
    return_geometry=True,
    process_vars=True,
):
    """Collect the variables defined in `geosnap.datasets.codebook` from the Census API.

    Parameters
    ----------
    state : str
        State for which data should be collected, e.g. "Maryland".
        If 'all' (default), the function loops through each state and returns
        a combined dataframe.
    level : str
        Census geographic tabulation unit, e.g. "block", "tract", or "county"
        (the default is 'tract').
    year : int
        ACS release year to query (the default is 2017).
    output_dir : str, optional
        Directory that intermediate parquet files will be written to. This is useful
        if the data request is large and the connection to the Census API fails while
        building the entire query. If None (default), nothing is written to disk.
    skip_existing : bool
        If caching files to disk, whether to skip states whose parquet file already
        exists (True, the default) or to download and overwrite them. Skipped states
        are not re-read and are not included in the returned dataframe.
    return_geometry : bool
        Whether to return geometry data from the Census API.
    process_vars : bool
        Whether to pass the raw table through `process_acs` before returning it
        (the default is True).

    Returns
    -------
    pandas.DataFrame or geopandas.GeoDataFrame
        Dataframe or GeoDataFrame containing variables from the geosnap codebook

    Examples
    --------
    >>> dc = fetch_acs('District of Columbia', year=2015)

    """
    from .._data import datasets

    states = datasets.states()
    _variables = datasets.codebook().copy()
    acsvars = _process_columns(_variables["acs"].dropna())

    def _cache_path(name):
        # Only build a path when caching was requested; Path(None, ...) raises.
        if output_dir is None:
            return None
        return Path(output_dir, f"{name.replace(' ', '_')}.parquet")

    def _fetch_one(name):
        # Query a single state and optionally post-process the result.
        df = products.ACS(year).from_state(
            state=name,
            level=level,
            variables=acsvars.copy(),
            return_geometry=return_geometry,
        )
        if process_vars:
            processed = process_acs(df)
            if return_geometry:
                processed["geometry"] = df.geometry
                df = gpd.GeoDataFrame(processed)
            else:
                df = processed
        return df

    if state == "all":
        dfs = []
        with tqdm(total=len(states), file=sys.stdout) as pbar:
            # Use a separate loop variable so the `state` argument is not shadowed.
            for name in states.sort_values(by="name")["name"].tolist():
                pth = _cache_path(name)
                if skip_existing and pth is not None and pth.exists():
                    tqdm.write(f"skipping {name}")
                else:
                    try:
                        df = _fetch_one(name)
                        dfs.append(df)
                        if pth is not None:
                            df.to_parquet(pth)
                    except Exception:
                        # Keep going so one failed state does not abort the run.
                        tqdm.write(f"{name} failed")
                pbar.update(1)
        df = pandas.concat(dfs)
    else:
        df = _fetch_one(state)
        pth = _cache_path(state)
        if pth is not None:
            df.to_parquet(pth)

    return df
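# The skip-if-cached pattern used by `fetch_acs` can be tried in isolation with a small, standard-library-only sketch. Everything here is hypothetical: `fetch_all` and `flaky_fetch` are invented for illustration, JSON stands in for parquet, and the simulated failure stands in for a dropped connection to the Census API.

```python
import json
import tempfile
from pathlib import Path


def fetch_all(names, fetch_one, cache_dir, skip_existing=True):
    """Fetch each name once, caching results on disk so a rerun can resume."""
    cache_dir = Path(cache_dir)
    results, failed = {}, []
    for name in names:
        path = cache_dir / f"{name.replace(' ', '_')}.json"
        if skip_existing and path.exists():
            # Reuse the cached result instead of hitting the network again.
            results[name] = json.loads(path.read_text())
            continue
        try:
            record = fetch_one(name)
        except Exception:
            failed.append(name)
            continue
        path.write_text(json.dumps(record))
        results[name] = record
    return results, failed


calls = []


def flaky_fetch(name):
    """Stand-in for a network request; fails the first time for one state."""
    calls.append(name)
    if name == "New Mexico" and calls.count(name) == 1:
        raise ConnectionError("simulated dropped connection")
    return {"state": name, "rows": len(name)}


with tempfile.TemporaryDirectory() as tmp:
    first, first_failed = fetch_all(["Maryland", "New Mexico"], flaky_fetch, tmp)
    second, second_failed = fetch_all(["Maryland", "New Mexico"], flaky_fetch, tmp)

print("first run failed:", first_failed)
print("requests made:", calls)
```

# Unlike `fetch_acs`, this sketch reloads cached results on a rerun so the combined output is complete; that is a design choice of the sketch, not a description of the function above.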

# acs.variables # lists ALL the column names (over 25k)

# acs.tables # lists ALL the tables (over 600)

# acs.filter_tables('RACE', by='description') # filter the tables by a keyword

# acs.filter_variables('B01003') # filter variables by a table ID

# get census data

censusTracts = products.ACS(2017).from_csa(

'Washington-Arlington-Alexandria',

level='tract',

variables=[

'B01003_001E', # total population

'B19013_001E', # median household income in the past 12 months

'B02001_001E', # race: total

'B02001_002E', # white

'B08301_001E', # means of transportation to work: total

'B08301_010E', # transit

'B08301_019E', # walking

'B08301_018E', # bicycling

'B15003_001E', # educational attainment: total

'B15003_022E', # bachelor's degree

'B15003_023E', # master's degree

'B15003_024E', # professional degree

'B15003_025E', # doctoral degree

'C24050_006E' # industry by occupation for the civilian population: retail trade

])

# calculate the share of nonwhite residents (a proportion between 0 and 1, not multiplied by 100)

censusTracts['P_NOWHITE'] = (

censusTracts['B02001_001E'] -

censusTracts['B02001_002E']) / censusTracts['B02001_001E']
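# As a quick check of the arithmetic above, here is the same formula on a few made-up tract counts in plain Python. `nonwhite_share` is a hypothetical helper and the numbers are invented; the explicit guard mirrors the NaN that pandas produces when a tract's total is zero.

```python
import math


def nonwhite_share(total, white):
    """Return (total - white) / total, or NaN for a tract with no residents."""
    if total == 0:
        return float("nan")
    return (total - white) / total


# (total population, white population) for three invented tracts.
rows = [(4000, 1000), (2500, 2500), (0, 0)]
shares = [nonwhite_share(total, white) for total, white in rows]
print(shares)
```

# Tracts with no residents come out as NaN, so they may need to be dropped or filled before mapping or modelling.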

# calculate percentage alternative commuters