def __init__(self, in_ch, out_ch, strides=1):
    super().__init__()
    # 1x1 convolution followed by batch normalization
    self.conv1 = tf.keras.Sequential([
        layers.Conv2D(out_ch, 1, 1, use_bias=False),
        tf.keras.layers.BatchNormalization()
    ])
    # 3x3 convolution; this is the layer that applies the block's stride
    self.conv2 = layers.Conv2D(out_ch,
                               3,
                               strides,
                               padding="same",
                               use_bias=False)
    self.bn2 = layers.BatchNormalization()
    # 1x1 convolution that expands the channel count by self.expansion
    self.conv3 = layers.Conv2D(out_ch * self.expansion,
                               1,
                               1,
                               use_bias=False)
    self.bn3 = layers.BatchNormalization()
    # Project the shortcut when the spatial size or channel count changes
    if strides != 1 or in_ch != self.expansion * out_ch:
        self.shortcut = tf.keras.Sequential([
            layers.Conv2D(self.expansion * out_ch,
                          kernel_size=1,
                          strides=strides,
                          use_bias=False),
            layers.BatchNormalization()
        ])
    else:
        # Identity shortcut; the second argument is ignored
        self.shortcut = lambda x, _: x

def call(self, x, pre_img=None, pre_hm=None):
    y = []
    x = self.base_layer(x)
    # Optionally add features computed from the pre_img and pre_hm inputs
    if pre_img is not None:
        x = x + self.pre_img_layer(pre_img)
    if pre_hm is not None:
        x = x + self.pre_hm_layer(pre_hm)
    # Run level0..level5 in order and collect the output of each level
    for i in range(6):
        # Named `level` so it does not shadow the `layers` module
        level = getattr(self, 'level{}'.format(i))
        if isinstance(level, tf.keras.Sequential) and level.built:
            for layer in level.layers:
                x = layer(x)
        else:
            x = level(x)
        y.append(x)
    return y

# PixelNormalization and WeightedSum are custom layers, not Keras built-ins;
# they are expected to be defined elsewhere.
def add_generator_block(old_model):
    # weight initialization
    init = RandomNormal(stddev=0.02)
    # weight constraint
    const = max_norm(1.0)
    # get the end of the last block
    block_end = old_model.layers[-2].output
    # upsample, and define new block
    upsampling = UpSampling2D()(block_end)
    g = keras.layers.Conv2D(128, (3, 3),
                            padding='same',
                            kernel_initializer=init,
                            kernel_constraint=const)(upsampling)
    g = PixelNormalization()(g)
    g = LeakyReLU(alpha=0.2)(g)
    g = keras.layers.Conv2D(128, (3, 3),
                            padding='same',
                            kernel_initializer=init,
                            kernel_constraint=const)(g)
    g = PixelNormalization()(g)
    g = LeakyReLU(alpha=0.2)(g)
    # add new output layer
    out_image = keras.layers.Conv2D(3, (1, 1),
                                    padding='same',
                                    kernel_initializer=init,
                                    kernel_constraint=const)(g)
    # define the straight-through model (new block only)
    model1 = Model(old_model.input, out_image)
    # get the output layer from old model
    out_old = old_model.layers[-1]
    # connect the upsampling to the old output layer
    out_image2 = out_old(upsampling)
    # define new output image as the weighted sum of the old and new models
    merged = WeightedSum()([out_image2, out_image])
    # define the fade-in model (old and new outputs blended)
    model2 = Model(old_model.input, merged)
    return [model1, model2]

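import tensorflow as tf
from tensorflow.keras import layers

# MaxPool2D() downsamples the image (max pooling); average pooling is also available
x = tf.ones([1, 14, 14, 4])
pool = layers.MaxPool2D(2, strides=2)
out = pool(x)
print(out)  # shape (1, 7, 7, 4)

pool = layers.MaxPool2D(3, strides=2)
out = pool(x)
print(out)  # shape (1, 6, 6, 4)

# Functional equivalent of the first pooling layer
out = tf.nn.max_pool2d(x, 2, strides=2, padding='VALID')
print(out.shape)  # (1, 7, 7, 4)

# Upscale the image with UpSampling2D
x = tf.random.normal([1, 17, 17, 4])
# Named `upsample` so it does not overwrite the imported `layers` module
upsample = layers.UpSampling2D(size=3)
out = upsample(x)
print(out.shape)  # (1, 51, 51, 4)

# Apply ReLU, which is available in both toolkits (tf.nn and tf.keras.layers);
# it zeroes out negative feature values
x = tf.random.normal([1, 7, 7, 3])
print(x)
x = tf.nn.relu(x)
print(x)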

class MyModel(Model):
    def __init__(self):
        super(MyModel, self).__init__()
        self.conv1 = tf.keras.layers.Conv2D(filters=32, kernel_size=3, activation='relu')
        self.flatten = Flatten()

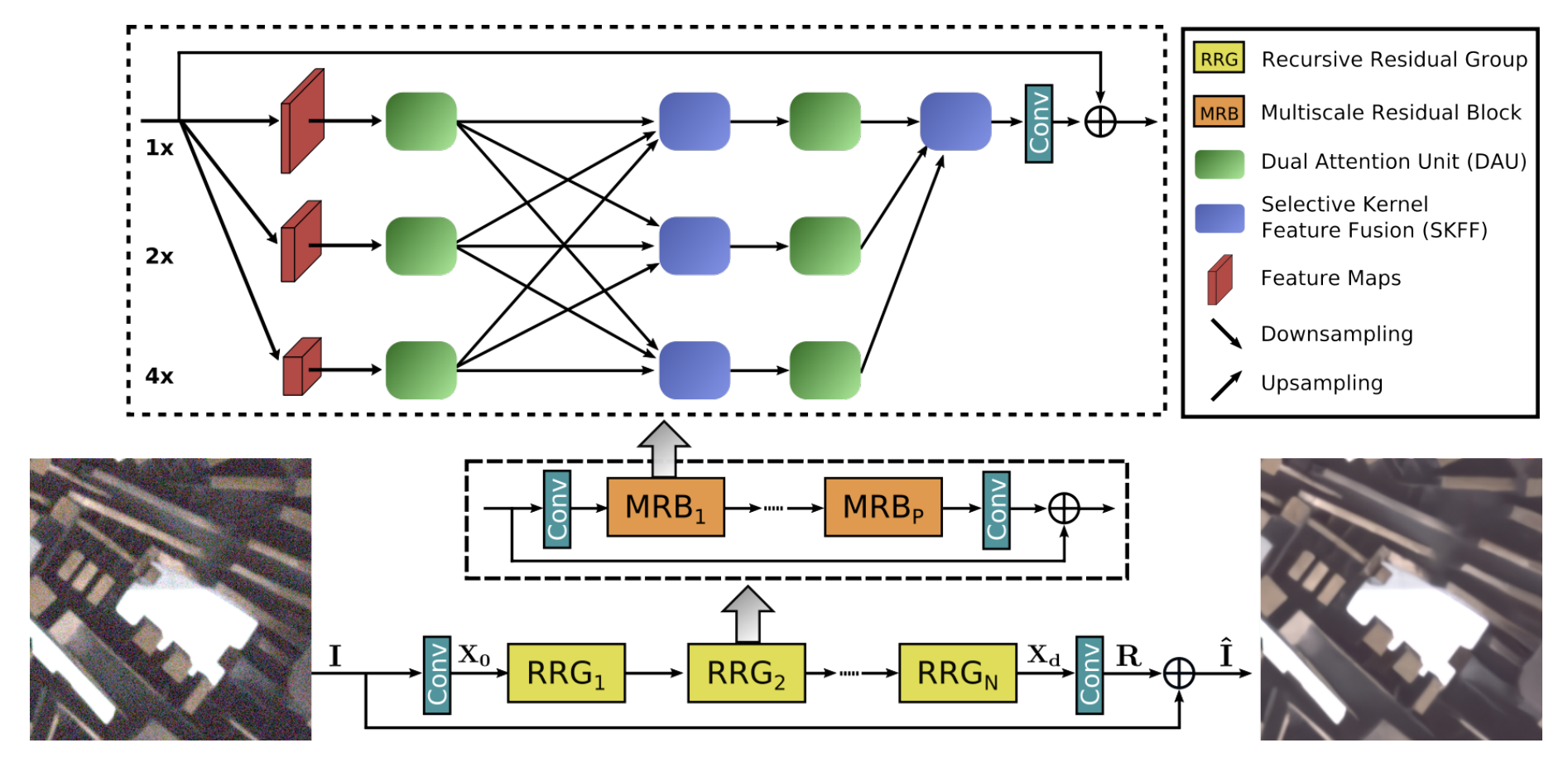

spatial scales, while maintaining the original high-resolution features to preserve
precise spatial details.
- A regularly repeated mechanism for information exchange, where the features across
multi-resolution branches are progressively fused together for improved representation
learning.
- A new approach to fuse multi-scale features using a selective kernel network
that dynamically combines variable receptive fields and faithfully preserves
the original feature information at each spatial resolution.
- A recursive residual design that progressively breaks down the input signal
in order to simplify the overall learning process, and allows the construction
of very deep networks.
"""

"""
### Selective Kernel Feature Fusion

The Selective Kernel Feature Fusion or SKFF module performs dynamic adjustment of
receptive fields via two operations: **Fuse** and **Select**. The Fuse operator generates
global feature descriptors by combining the information from multi-resolution streams.
The Select operator uses these descriptors to recalibrate the feature maps (of different
streams) followed by their aggregation.

**Fuse**: The SKFF receives inputs from three parallel convolution streams carrying
different scales of information. We first combine these multi-scale features using an
element-wise sum, on which we apply Global Average Pooling (GAP) across the spatial
dimension. Next, we apply a channel-downscaling convolution layer to generate a compact
feature representation which passes through three parallel channel-upscaling convolution
layers (one for each resolution stream) and provides us with three feature descriptors.
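
The Fuse steps described above can be traced with a small framework-free sketch. This is only an illustration under stated assumptions, not the module's actual implementation: the function name `skff_fuse`, the reduction ratio `r = 8`, the ReLU after the downscaling step, the random weights, and the premise that the three streams have already been resized to a common resolution are all hypothetical choices made for the example. Because GAP leaves a 1x1 spatial map, each 1x1 convolution reduces to a matrix product over channels.

```python
import numpy as np


def skff_fuse(streams, w_down, w_ups):
    # streams: three (H, W, C) arrays, assumed already at a common resolution
    combined = np.sum(streams, axis=0)           # element-wise sum -> (H, W, C)
    gap = combined.mean(axis=(0, 1))             # global average pooling -> (C,)
    # Channel-downscaling 1x1 convolution, written as a matrix product.
    # The ReLU here is an assumption made for this sketch.
    compact = np.maximum(gap @ w_down, 0.0)      # -> (C // r,)
    # Three parallel channel-upscaling 1x1 convolutions, one per stream
    return [compact @ w_up for w_up in w_ups]    # three (C,) descriptors


rng = np.random.default_rng(0)
H, W, C, r = 8, 8, 16, 8                         # r is a hypothetical reduction ratio
streams = [rng.normal(size=(H, W, C)) for _ in range(3)]
w_down = rng.normal(size=(C, C // r))
w_ups = [rng.normal(size=(C // r, C)) for _ in range(3)]

descriptors = skff_fuse(streams, w_down, w_ups)
print([d.shape for d in descriptors])            # [(16,), (16,), (16,)]
```

Each of the three descriptors has one entry per channel, which is the shape the Select operator needs in order to recalibrate the corresponding stream's feature maps.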

#%%

import tensorflow as tf

tf.keras.backend.clear_session()


def layers(inputs, units, activation=None):
    # Dense layer whose output is concatenated with its own input along the feature axis
    l = tf.keras.layers.Dense(units, activation=activation)(inputs)
    x = tf.concat([inputs, l], 1)
    return l, x


# shape must be a tuple: (38) is just the integer 38
inputs = tf.keras.Input(shape=(38,))

# Stack seven densely connected blocks; each block sees all earlier features
x = inputs
for _ in range(7):
    _, x = layers(x, 20, tf.nn.leaky_relu)

x1 = tf.keras.layers.Dense(1)(x)
model = tf.keras.Model(inputs, x1)
tf.keras.utils.plot_model(model, show_shapes=True, show_layer_names=True)

#%%

import numpy as np

optimizer = tf.keras.optimizers.Adam(0.0002)
global_steps = 0

# 28 x 28 coordinate grid over [0, 1] x [0, 1]
X = np.linspace(0, 1, 28)
x, y = np.meshgrid(X, X)